ViRGE used to be a popular graphics chip in the very early era of video/3D acceleration (1996-1997). However, the 3D market evolved quickly and the ViRGE-series chips moved into the entry/budget segment of 3D (it was said that a 3D accelerator became obsolete within six months of its release). Modern vintage computer collectors often call it a “decelerator”. Some even say that nobody could play accelerated 3D games on these cards and that it is the worst 3D accelerator ever.

I totally disagree with them, and this page will show you my rather controversial perspective on this topic. There are multiple reasons why the ViRGE-series chips have such a bad reputation. Modern YouTubers often test them with games from 1999, 3DMark99 and similar benchmarks, which obviously results in many visual artifacts (caused by missing features) and miserable performance. For some, this is enough to say that the chip was bad.

Diamond Stealth 3D 2000 4MB / S3 Virge 325 (source: vgamuseum.com)

Another reason is that the performance of different OEM cards with these chips can vary a lot. Two cards can carry the same chip name, yet one of them can deliver twice the performance of the other. I will elaborate on this as well.

My perspective on early consumer PC accelerators is shaped by my knowledge of the professional 3D workstation market. 3D acceleration took off in the 1980s, at least a decade before we saw consumer 3D accelerators for PCs. Some friends and I benchmarked the raw performance of both professional 3D workstations (UNIX and Windows) and early consumer cards to understand where early PC graphics chips stood (visit the GPUbench page).

Years ago, I made a video showing multiple old games running on a ViRGE series card. It is in Czech with English subtitles. I used a computer with a 1GHz Pentium III, but the card is pixel fill-rate limited all the time, so any Pentium II would provide the same results.

The 3D market in the times when S3 ViRGE was released

The original S3 ViRGE 325 was released in the first half of 1996. It is worth recalling which consumer 3D accelerators were available at the time. Matrox Millennium (MGA-2064W) came out in the middle of 1995. It had very primitive OpenGL support in Windows NT and was able to accelerate drawing of smooth-shaded polygons with no additional effects (no alpha-blending, no texturing). Three other cards came out by the end of 1995: Paradise Tasmania 3D (with a Yamaha chip, a proprietary API and only two games that ever used it), Creative 3D Blaster VLB (a VL-bus 3D-only accelerator with a stripped-down 3Dlabs chip) and NVIDIA NV1 (an odd integrated 2D/3D/VGA/sound card that worked with quadratic texture mapping instead of triangles).

Only the 3D Blaster was a somewhat capable 3D accelerator. It was faster than ViRGE, but it had fewer features (no texture filtering, crude perspective correction, supposedly no blending). It was also doomed by being released just as mainboards were switching to the PCI bus. There were also expensive professional OpenGL accelerators for PCs from 3Dlabs (priced around $3,000), but that was all.

S3 ViRGE 325 was ready by the end of 1995. Developers already had card samples and were modifying their games for the proprietary S3D API. However, the decision was made to wait for Microsoft and the first Direct3D. That happened in April 1996, and S3 ViRGE 325 was one of the few cards supporting it from day one. The other was ATI 3D Rage, which followed the same timing strategy.

Both the ViRGE 325 and the 3D Rage support bilinear texture filtering and true alpha-blending. Both were cheap, and both had a well-supported 2D/VGA engine and video acceleration integrated in the chip. If you wanted to buy a Direct3D-compatible (DirectX 2.0) consumer video card in mid-1996, these were the best choices. The ViRGE 325 has about the same performance as the ATI 3D Rage. On the other hand, the 3D Rage does not have a Direct3D-compatible Z-Buffer, which means that only games that support polygon sorting can work (if a scene is properly designed, with all polygons sorted by the CPU and drawn from far to near, you can render the 3D scene without a Z-Buffer). There were many compatibility issues with the 3D Rage even in early Direct3D games, so the ViRGE 325 was the better choice after all (if you were interested in the 3D part of the chips).
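The polygon sorting mentioned above is usually called the painter's algorithm. Below is a minimal sketch. It assumes a hypothetical `Polygon` type whose sort key is the average depth of its vertices. Real engines used various heuristics, and no single key works for every scene. `draw_without_zbuffer` and the `draw` callback are illustrative only; they are not a Direct3D or ATI API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vertex = Tuple[float, float, float]  # screen X', Y' and depth Z' (larger = farther)


@dataclass
class Polygon:
    name: str
    vertices: List[Vertex]

    def average_depth(self) -> float:
        # Simple sort key: the mean Z' of the vertices (an assumption, see above)
        return sum(v[2] for v in self.vertices) / len(self.vertices)


def draw_without_zbuffer(polygons: List[Polygon],
                         draw: Callable[[Polygon], None]) -> None:
    """Painter's algorithm: the CPU sorts polygons from far to near and the
    rasterizer simply overdraws, so nearer polygons end up on top."""
    for poly in sorted(polygons, key=Polygon.average_depth, reverse=True):
        draw(poly)


# Usage: record the order in which a hypothetical rasterizer receives polygons
order = []
scene = [
    Polygon("wall", [(0, 0, 50.0), (10, 0, 50.0), (0, 10, 50.0)]),
    Polygon("crate", [(2, 2, 10.0), (6, 2, 12.0), (2, 6, 11.0)]),
    Polygon("floor", [(0, 9, 40.0), (10, 9, 60.0), (5, 10, 45.0)]),
]
draw_without_zbuffer(scene, lambda p: order.append(p.name))
print(order)
```

Sorting costs CPU time every frame. Intersecting or cyclically overlapping polygons cannot be ordered correctly by any single key, which is one reason a hardware Z-Buffer was so valuable.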

All the better 3D accelerators were released at least half a year later. The almighty 3Dfx Voodoo Graphics was released by the end of 1996.

What was wrong with ViRGE?

It was an early technology done on a budget. I would say that all the budget-level 2D/3D accelerators from 1996 soon looked bad. They aged quickly because of the $300 3Dfx Voodoo Graphics board. 3Dfx had a clear vision of what 3D gaming should look like. Its founders came from the world of high-end graphics supercomputers (mostly Silicon Graphics), so they had already been through all of this five years earlier. They understood what features and what level of graphics performance were required to create a successful gaming accelerator, and they skipped all the functions not necessary for games.

S3, like many other vendors, wanted a universal 3D solution: cheap and with many functions. ViRGE 325 is actually pretty fast at drawing non-textured smooth-shaded polygons. It can render one pixel per chip clock, and it takes two chip clock cycles to do the same with the Z-Buffer enabled. There is some overhead caused by handling the command queue, swapping buffers and other things, but a 55-MHz ViRGE 325 can render up to 44Mpix/s (million pixels per second) on non-textured polygons without a Z-Buffer. Enabling the Z-Buffer decreases the performance to 23Mpix/s, which is still good among 1996 cards (30% slower than Matrox Millennium, but faster than 3Dlabs Permedia/GLiNT chips or ATI 3D Rage II).

S3 considered non-textured performance important (presumably with VRML and 3D visualization in mind). They underestimated the importance of fast texture mapping. In 1995/1996, it was not uncommon to have 30-50% of the game screen filled with polygons without textures (e.g. sky, fog), but this changed very soon. Unlike nearly all later 3D accelerators, ViRGE 325 (and all subsequent ViRGE chips) does not have any texture cache (not even a small one), which results in a big performance hit on polygons involving textures (especially when bilinear filtering is enabled).

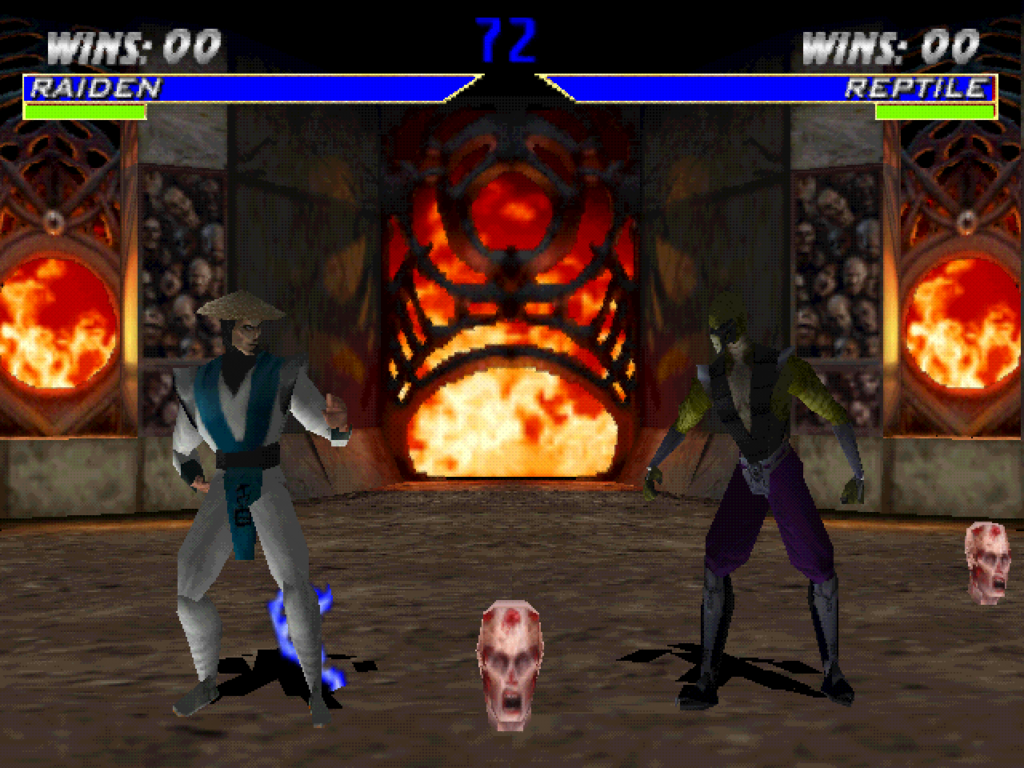

Mortal Kombat 4 (1997) does not use any non-textured polygons or 2D elements in the scene and works in a resolution of 640×480 (with no option to change it). Thus, it is quite pixel fill-rate demanding for very early 3D accelerators.

ViRGE 325 / VX rasterizer speed (no texturing):

- Smooth-shaded polygons (base) = 1 cycle/pixel

- Added alpha-blending = ~+1 cycle/pixel

- Added Z-Buffer = ~+1 cycle/pixel

The numbers above mean that a scene with smooth-shaded, semi-transparent, Z-Buffered polygons is drawn at three chip clock cycles per pixel (55MHz ViRGE 325 = ~14Mpix/s, which is comparable to low-end workstations like the SGI Indy XL/XGE sold at the same time).

ViRGE 325 / VX rasterizer speed (perspective-correct texturing):

- Bilinear filtered texture = ~+7 cycles/pixel

- Point-sampled (non-filtered) texture = ~+5 cycles/pixel

Based on this, you need 9 cycles/pixel to draw a smooth-shaded, Z-Buffered polygon with a bilinear-filtered texture (=1+1+7). That means just ~5Mpix/s (or ~6Mpix/s without filtering, at 1+1+5 = 7 cycles/pixel).

With just 5Mpix/s, you can be sure that such a chip cannot accelerate a fully textured game in 640×480 (sadly, early games often use a fixed 640×480 resolution when running the S3-accelerated version). One frame in this resolution is ~0.3Mpix. If we assume that each pixel on the screen is drawn just ~1.3x per frame (overdraw), we can draw just 13 frames per second (=5/0.3/1.3).
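The arithmetic above can be written as a tiny model. It is a sketch, not a benchmark. The cycle costs are the approximate ViRGE 325 / VX figures listed above. The flat 80% efficiency factor is an assumption inferred from the 44Mpix/s achieved at 55MHz, which reflects command-queue handling, buffer swaps and other overhead. Real overhead varies from game to game.

```python
# Approximate ViRGE 325 / VX rasterizer costs in chip clock cycles per pixel
BASE_CYCLES = 1                 # smooth-shaded polygon
EXTRA_CYCLES = {
    "blend": 1,                 # alpha-blending
    "z": 1,                     # Z-Buffer
    "bilinear": 7,              # perspective-correct, bilinear-filtered texture
    "point": 5,                 # perspective-correct, point-sampled texture
}
EFFICIENCY = 44.0 / 55.0        # assumption: overhead inferred from 44 Mpix/s at 55 MHz


def fill_rate_mpix(features, clock_mhz=55.0):
    """Estimated fill-rate in Mpix/s for a polygon using the given features."""
    cycles = BASE_CYCLES + sum(EXTRA_CYCLES[f] for f in features)
    return clock_mhz / cycles * EFFICIENCY


def frames_per_second(fill_rate, width=640, height=480, overdraw=1.3):
    """Frames per second if every pixel is drawn `overdraw` times on average."""
    frame_mpix = width * height / 1e6
    return fill_rate / (frame_mpix * overdraw)


for features in ([], ["blend", "z"], ["z", "bilinear"], ["z", "point"]):
    rate = fill_rate_mpix(features)
    print(f"{'+'.join(features) or 'plain'}: {rate:.1f} Mpix/s, "
          f"{frames_per_second(rate):.1f} fps in 640x480")
```

With a Z-Buffer and bilinear filtering, the model gives ~4.9Mpix/s and ~12 fps. This is in line with the rounded figures in the text.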

A common trick in very early 3D games was to leave ~30-40% of the screen non-textured. Even if you lose some performance to fog calculation, it is still beneficial. Havoc is a nice example:

If the textured Z-Buffered polygons are drawn at 5Mpix/s and the non-textured, non-Z-Buffered sky at 44Mpix/s, covering 25% of the screen with a solid-color sky raises the average pixel fill-rate to ~6.4Mpix/s. The two rates have to be averaged by the time spent on each pixel, not by screen area. A plain area-weighted average (~15Mpix/s) would overstate the gain. In 640×480, that means roughly 16 fps instead of 13 fps, just from the cheap sky. Have you ever wondered why old 3D games had such short viewing distances and everything was covered in fog? Well, this is one of the reasons.
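Mixing two fill-rates is easy to get wrong. The sky pixels and the textured pixels are drawn one after another, so their drawing times add up. The effective rate is therefore a time-weighted (harmonic) mean, not an area-weighted average (0.75 × 5 + 0.25 × 44 ≈ 15Mpix/s). Below is a minimal sketch using the figures above. As a simplification, it applies the same 1.3x overdraw to the whole screen.

```python
def mixed_fill_rate(parts):
    """parts: (screen_fraction, fill_rate_mpix) pairs. Pixels of each kind are
    drawn sequentially, so per-pixel times add up: rate = 1 / sum(f / r)."""
    total = sum(f for f, _ in parts)
    time_per_mpix = sum(f / rate for f, rate in parts) / total
    return 1.0 / time_per_mpix


textured = 5.0   # Mpix/s, textured Z-Buffered polygons
sky = 44.0       # Mpix/s, solid-color, non-Z-Buffered sky

only_textured = mixed_fill_rate([(1.0, textured)])
with_sky = mixed_fill_rate([(0.75, textured), (0.25, sky)])
area_weighted = 0.75 * textured + 0.25 * sky   # naive average, overstates the gain

frame_mpix = 640 * 480 / 1e6
overdraw = 1.3
for label, rate in [("all textured", only_textured), ("25% sky", with_sky)]:
    print(f"{label}: {rate:.1f} Mpix/s, {rate / (frame_mpix * overdraw):.1f} fps")
```

The 25% sky yields ~6.4Mpix/s, about 28% more than drawing the whole screen textured. Because every textured pixel still costs the same, the benefit grows only slowly with the sky's share of the screen.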

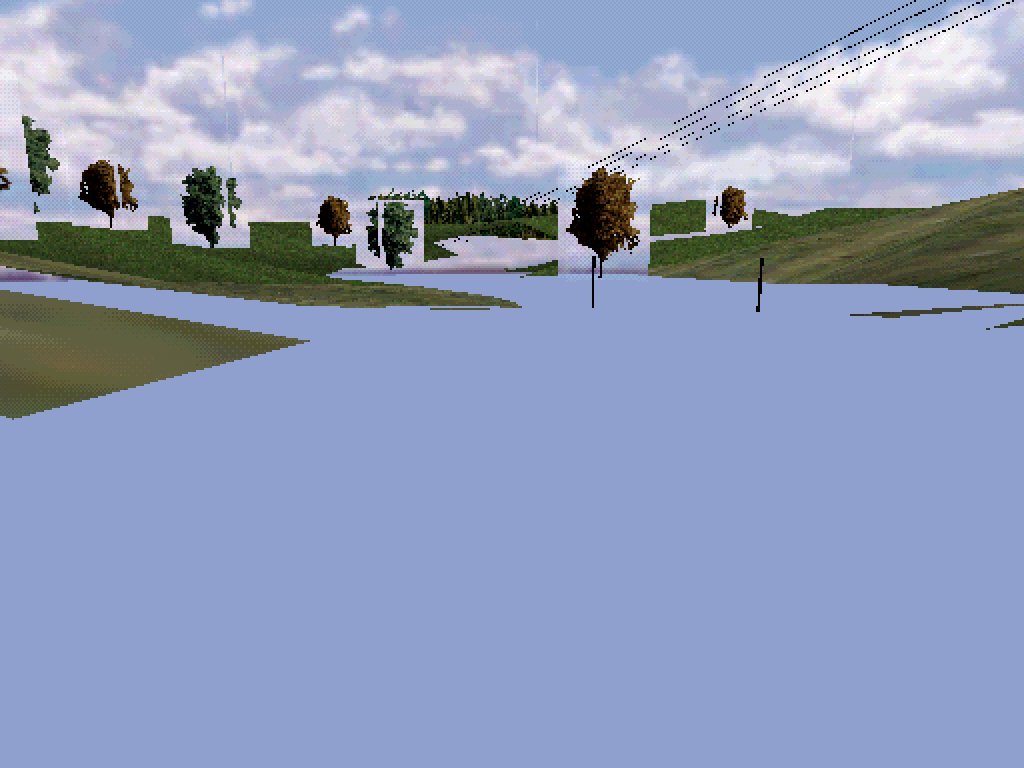

Another way to decrease the size of the screen area filled with textured polygons was a 2D dashboard drawn outside the 3D pipeline. Try Monster Truck Madness: the cockpit view gives you significantly higher frame rates than the camera behind the car because the 3D viewport is smaller.

Monster Truck Madness (1996) is one of the early Direct3D games that decreases the 3D performance requirements by decreasing the size of a 3D viewport: (1) Each frame starts with the 3D rendering inside the wide rectangular area and it overdraws the previous frame, so you can see the truck’s 2D dashboard above and below it; (2) Once the 3D scene is rendered, the game uses 2D functions of the graphics hardware to draw the dashboard – partially even over the 3D viewport area.

Additional notes:

- This is just a theoretical calculation. In real life, higher frame rates mean more buffer swaps and more commands in the command queue per second, so the performance increase will be lower.

- The results were measured using 16-bit textures, because GPUbench is an OpenGL (1.1) benchmark, and OpenGL primarily picks the texture color depth based on the screen color depth (unless you use OpenGL extensions). I assume that a lower texture color depth can help, but results from other benchmarks suggest that the difference will not be big. Based on the ViRGE DX results, there is very little performance difference between 16-bit and 24-bit color depth.

- The absence of a texture cache makes ViRGE cards quite insensitive to texture resolution. This is different from chips like the ATI Rage II, where a small texture cache could increase performance heavily if you worked with the right texture sizes and 4/8-bit palettized texture modes.

Why ViRGE chips don’t like slow CPUs

A long time ago, I used to think that there was a clear distinction between 2D and 3D accelerators. To me, it seemed that adding one special feature turned a 2D-only card into a 3D accelerator. That is not how it works. There are multiple steps in the 3D pipeline, and the chip manufacturer can decide which steps will be hardware-accelerated. The rest can be done in software (that is, by the CPU).

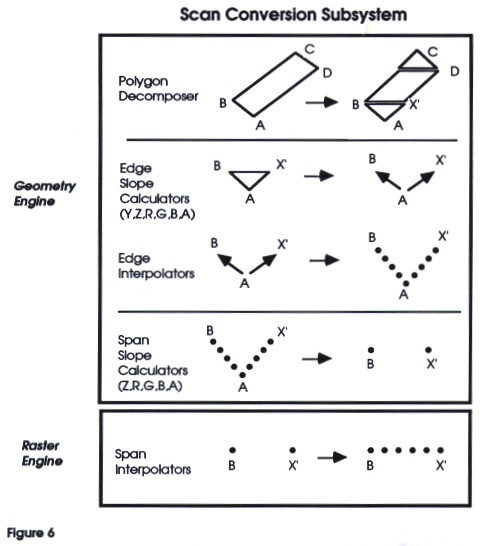

This is a simplified overview of what needs to be done to draw a triangle in a primitive 3D pipeline:

1. Geometry transformation: the 3D world X/Y/Z coordinates of the vertices (defining the triangle) are transformed into a 2D X’/Y’/(Z’) projection on the screen (based on the camera position and angle in the 3D scene). X’ and Y’ define the 2D position on the screen, and Z’ defines the distance between the camera and the vertex.

2. Optional vertex lighting: colors are calculated for each of the three vertices of the triangle (based on the positions of spot/ambient lights in the 3D scene and light/surface parameters).

3. Edge-slope calculations: slope parameters are calculated for each dimension of each edge of the triangle. This includes not only the X’/Y’/Z’ positions, but also the R/G/B/A color and alpha (opacity) values if these change between vertices. If textures are involved, the same must be done for the U/V texture coordinates (perspective-correct texturing adds an additional W parameter).

4. Edge interpolation: the triangle is decomposed into spans (one-pixel-thin horizontal lines). Based on the vertex positions and the already calculated edge slopes, edge interpolation defines the start and end X’/Y’/Z’ positions of each span on the screen.

5. Span-slope calculations: the edge-slope parameters are then used to calculate the R/G/B/A/U/V/W (color/alpha/texture) values at the starting point of each span and their slopes between the start and end of the span.

6. Span interpolation: spans are drawn into framebuffer memory (color buffer and Z-Buffer). The R/G/B/A/U/V/W parameters of each pixel are interpolated using the previously calculated span slopes. If texturing is involved, U/V/W define a texel position in the texture image. The final color of each pixel is a combination of the R/G/B color values and the texel color. If a new pixel is semi-transparent (A), the chip must first read the color buffer, take the color of the previously stored pixel at the same screen location and combine it with the new color (based on the transparency settings). If Z (depth) testing is involved and the Z’ value of a new pixel is higher than the Z’ value of the one previously stored at the same screen location, drawing of the new pixel can be skipped completely (the Z test condition can be changed freely, but “less or equal” is the most common setup).
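The span interpolation stage is the part every accelerator of the era did in hardware. Below is a minimal software model of it. It assumes a hypothetical `Span` record handed over by the span-slope stage, constant per-span opacity, point-sampled affine (not perspective-correct) texturing and a “less or equal” Z test. It illustrates the per-pixel work; it does not show how ViRGE or any other chip implements it.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

RGB = Tuple[float, float, float]


@dataclass
class Span:
    """One span: start values plus per-pixel slopes (hypothetical layout)."""
    y: int
    x_start: int
    x_end: int                      # inclusive
    z: float
    dz: float
    rgb: RGB
    drgb: RGB
    u: float = 0.0
    du: float = 0.0
    v: float = 0.0
    dv: float = 0.0
    alpha: float = 1.0              # constant opacity for the whole span


def draw_span(span: Span, color_buf: List[List[RGB]], z_buf: List[List[float]],
              texture: Optional[List[List[RGB]]] = None) -> None:
    z, rgb, u, v = span.z, span.rgb, span.u, span.v
    for x in range(span.x_start, span.x_end + 1):
        if z <= z_buf[span.y][x]:                        # "less or equal" Z test
            color = rgb
            if texture is not None:                      # point-sampled, affine U/V
                texel = texture[int(v) % len(texture)][int(u) % len(texture[0])]
                color = tuple(c * t for c, t in zip(color, texel))
            if span.alpha < 1.0:                         # blending reads the old pixel
                old = color_buf[span.y][x]
                color = tuple(span.alpha * c + (1.0 - span.alpha) * o
                              for c, o in zip(color, old))
            color_buf[span.y][x] = color
            z_buf[span.y][x] = z
        # Step every interpolated parameter by its per-pixel slope
        z += span.dz
        rgb = tuple(c + d for c, d in zip(rgb, span.drgb))
        u += span.du
        v += span.dv


# Usage: opaque red span, then a half-transparent blue span in front of part
# of it, then a green span behind everything (rejected by the Z test)
color_buf = [[(0.0, 0.0, 0.0)] * 4]
z_buf = [[float("inf")] * 4]
flat = (0.0, 0.0, 0.0)
draw_span(Span(0, 0, 3, z=0.5, dz=0.0, rgb=(1.0, 0.0, 0.0), drgb=flat), color_buf, z_buf)
draw_span(Span(0, 1, 2, z=0.4, dz=0.0, rgb=(0.0, 0.0, 1.0), drgb=flat, alpha=0.5),
          color_buf, z_buf)
draw_span(Span(0, 0, 3, z=0.9, dz=0.0, rgb=(0.0, 1.0, 0.0), drgb=flat), color_buf, z_buf)
print(color_buf[0])
```

The blending branch is why semi-transparent pixels cost extra memory traffic: the old color must be read before the new one can be written.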

The first stages require complex calculations, but they are done on a per-triangle basis. Calculations in the later stages are simpler, but they are performed far more often (per span, per pixel). Affordable mid-1990s 3D accelerators could not do all the stages in hardware in a single chip. There were indeed fast 3D accelerators doing all of this, but those were multi-board professional solutions for UNIX workstations costing tens of thousands of dollars.

Creating a low-cost, budget-oriented 3D accelerator was a difficult task full of tradeoffs back then. The truly minimalistic approach was chosen by SGI when they worked on the REX graphics chips in the early 90s (Indigo Entry, Indy XL/XGE “Newport”). The REX accelerated only span interpolation (stage 6). The CPU had to calculate all per-triangle, per-vertex and per-slope parameters, and the REX only interpolated span parameters between the start and end points and wrote the spans into framebuffer memory. Z-Buffering and Z testing were done in software, but there was a fast path that let the CPU provide a bitmask for a span, so that the REX drew only the pixels that should be visible. Even this approach allowed a 5-to-10-fold pixel fill-rate increase compared with software-only rendering.
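The division of labor described for REX can be illustrated with two small functions. This is a hypothetical sketch of the idea only; SGI's actual interface is not described here. `cpu_depth_mask` plays the CPU, which does the Z test in software and produces a span bitmask. `masked_span` plays the chip, which interpolates a single intensity value along the span and writes only the masked pixels.

```python
def cpu_depth_mask(z_row, x_start, span_z):
    """CPU side: software Z test for one span. Updates the Z-Buffer row kept in
    main memory and returns a bitmask (bit i set = pixel x_start + i visible)."""
    mask = 0
    for i, z in enumerate(span_z):
        x = x_start + i
        if z <= z_row[x]:                 # "less or equal" depth test
            z_row[x] = z
            mask |= 1 << i
    return mask


def masked_span(frame_row, x_start, length, start_value, slope, mask):
    """Chip side: interpolate one parameter along the span and write only
    the pixels whose mask bit is set."""
    for i in range(length):
        if (mask >> i) & 1:
            frame_row[x_start + i] = start_value + i * slope


z_row = [10] * 8
frame_row = [0] * 8
mask = cpu_depth_mask(z_row, 2, [5, 5, 20, 5])   # third pixel is hidden
masked_span(frame_row, 2, 4, 100, 10, mask)
print(bin(mask), frame_row)
```
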

ViRGE chips are not that simple. They do stages 4-6 in hardware, so the CPU calculates the 2D projection and vertex lighting and prepares the edge-slope parameters. If stages 1 and 2 are done in hardware, people often say that the graphics card has a geometry unit (or a TnL unit; TnL = transform & lighting). Stage 3 is called triangle setup. ViRGE has neither a geometry unit nor a triangle setup unit.
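To make the CPU's share of the work concrete, here is a minimal sketch of triangle setup (stage 3) for a triangle whose vertices are already projected and lit. The dictionary layout and function names are hypothetical. Floating-point division stands in for whatever fixed-point math real drivers used, and perspective correction (the W parameter) is left out. On ViRGE, something along these lines has to run in software for every triangle.

```python
from typing import Dict, List

Vertex = Dict[str, float]   # "x", "y" = screen position; other keys = attributes


def edge_slopes(start: Vertex, end: Vertex) -> Dict[str, float]:
    """Per-scanline increment of X' and every attribute along one edge."""
    dy = end["y"] - start["y"]
    keys = [k for k in start if k != "y"]
    if dy == 0:                           # horizontal edge: never stepped along Y
        return {k: 0.0 for k in keys}
    return {k: (end[k] - start[k]) / dy for k in keys}


def triangle_setup(vertices: List[Vertex]) -> Dict[str, Dict[str, float]]:
    """Stage 3 on the CPU: sort vertices by Y and compute slopes of all edges."""
    top, mid, bottom = sorted(vertices, key=lambda v: v["y"])
    return {
        "long": edge_slopes(top, bottom),    # spans the full height of the triangle
        "upper": edge_slopes(top, mid),
        "lower": edge_slopes(mid, bottom),
    }


triangle = [
    {"x": 0.0, "y": 0.0, "z": 1.0, "r": 0.0},
    {"x": 10.0, "y": 10.0, "z": 3.0, "r": 1.0},
    {"x": 0.0, "y": 20.0, "z": 5.0, "r": 0.0},
]
setup = triangle_setup(triangle)
print(setup["upper"])   # X' moves by 1 per scanline, Z' by 0.2, red by 0.1
```
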

Not having a triangle setup unit was common practice in 1996. ATI 3D Rage cards (before the Rage Pro chips), 3Dlabs Permedia (1) and other cards, including the first 3Dfx Voodoo Graphics, didn’t have one either. Even the professional 3Dlabs GLINT chips (300SX/500TX) offered triangle setup only as an optional separate chip (GLINT Delta).

Although the first 3Dfx Voodoo needed triangle setup to be done in software, its routines were heavily optimized in the driver and the Glide API. On the other hand, the first versions of Direct3D were a horrible mess (to the point that the whole API was soon completely redesigned). A clean, efficient driver/API with low CPU overhead let the Voodoo card work even with ~100MHz Pentium CPUs (in early Glide games).

In contrast, good ViRGE boards (like the 74MHz STB Nitro 3D / ViRGE GX) are so limited by a slow CPU in Direct3D that a 133-MHz Pentium delivers half the framerate of a 166-MHz Pentium MMX (note that the Pentium MMX also has twice the L1 cache, so the difference is not only in the clock). I would say that a 166- or 200-MHz Pentium MMX is the minimum for 3D gaming with S3 ViRGE chips.

Several (modern) web pages show the ATI Rage II/II+DVD as superior to the S3 ViRGE DX/GX. It is worth adding that they benchmarked the cards on newer PCs with powerful Pentium III CPUs (or better). However, ATI’s pre-Rage Pro chips behave badly with slow Pentium I CPUs due to the large CPU overhead of the ATI driver. In a 150-MHz Pentium system, you would get nearly twice as much performance from a ViRGE DX/GX as from a Rage II+DVD, although the chips should be comparable on machines with faster CPUs (Rage I/II should be combined with at least a Pentium II).

Thus, if you are looking for a 3D accelerator for your early Pentium I machine, you should choose something with a triangle setup unit (like Matrox Millennium II, 3Dlabs Permedia 2 or ATI Rage Pro) or a 3Dfx Voodoo Graphics (if you focus on Glide API games). The absolute best choice is the 3Dfx Voodoo2, which gives you the best framerates with these slow CPUs.

ViRGE DX & GX

Half a year after the first S3 ViRGE 325, the improved ViRGE DX and GX chips were released. Feature-wise, the original ViRGE was very good: it supported high-quality bilinear and trilinear texture filtering and true alpha-blending. The newer DX/GX chips neither added new 3D features nor changed the 3D output quality. They just improved texturing performance, resulting in faster trilinear filtering and a minimized performance hit from texture perspective correction. They also vastly improved performance when textured polygons are drawn without texture filtering (“point-sampled textures”).

The ViRGE DX/GX rasterizer speed is identical to ViRGE 325 if textures are not involved. On the other hand, the performance of an ultra-cheap 45-MHz ViRGE DX in textured games is comparable to a 55-MHz ViRGE 325 if texture filtering is used. If not, the performance is about 50% higher. You can compare the (perspective-corrected) texturing performance hit of the original and improved ViRGE cores below:

ViRGE 325 / VX rasterizer texturing speed:

- Bilinear filtered texture = ~+7 cycles/pixel

- Point-sampled (non-filtered) texture = ~+5 cycles/pixel

ViRGE DX / GX (EDO) rasterizer texturing speed:

- Bilinear filtered texture = ~+4 cycles/pixel

- Point-sampled (non-filtered) texture = ~+2 cycles/pixel
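Plugging the two tables into the same formula shows where the “comparable” and “50% higher” observations come from. This is a sketch under two assumptions: both cores spend 1+1 cycles on smooth shading and the Z-Buffer (as listed earlier), and overhead is ignored, so the results are theoretical peaks.

```python
SHADE_AND_Z = 1 + 1   # smooth shading + Z-Buffer, identical on both cores

TEXTURE_CYCLES = {
    "ViRGE 325/VX": {"bilinear": 7, "point": 5},
    "ViRGE DX/GX (EDO)": {"bilinear": 4, "point": 2},
}


def textured_fill_rate(chip, mode, clock_mhz):
    """Theoretical peak Mpix/s for a smooth-shaded, Z-Buffered, textured polygon."""
    return clock_mhz / (SHADE_AND_Z + TEXTURE_CYCLES[chip][mode])


for mode in ("bilinear", "point"):
    old = textured_fill_rate("ViRGE 325/VX", mode, 55.0)
    new = textured_fill_rate("ViRGE DX/GX (EDO)", mode, 45.0)
    print(f"{mode}: 55-MHz ViRGE 325 {old:.1f} Mpix/s, "
          f"45-MHz ViRGE DX {new:.1f} Mpix/s ({new / old:.2f}x)")
```

This simple model puts the 45-MHz DX roughly 20% ahead with bilinear filtering and roughly 40% ahead with point sampling. Real games add overhead that the model ignores.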

There is very little difference between the ViRGE DX and GX chips. The 3D core is exactly the same. GX just has a better memory controller that allows board manufacturers to use synchronous memory chips (SDRAM/SGRAM) in addition to EDO RAM. Sadly, due to the missing texture cache, ViRGE chips use synchronous memory very inefficiently. SDRAM/SGRAM-based ViRGE GX boards take a 15-25% performance hit when drawing textured triangles compared with EDO-based ViRGE GX boards running at the same clock.

The best ViRGE DX/GX cards run at 75MHz, and you should look for those with EDO RAM. ViRGE DX and GX offer identical performance (per MHz) if they are equipped with the same type of memory. There are plenty of GX boards with EDO memory, and these don’t have any advantage over DX (although GX models were perceived as something better back then).

Nightmare Creatures (1997) looks and runs perfectly on higher-clocked S3 ViRGE DX/GX cards at 512×384.

For comparison: this is the same game running accelerated on the Matrox Millennium II, released at about the same time as the S3 ViRGE GX2.

Should it be called a “decelerator”?

At some point, every 3D accelerator becomes a decelerator. It happens when a software solution becomes faster than the hardware-assisted one. It is true that some games run faster with a software renderer than with an S3 ViRGE (DX/GX) once you have a Pentium II CPU. However, this comparison can be quite unfair. A typical example is Quake (vs. GLQuake) or Quake II. These games have extremely fast software renderers that are perfectly optimized for the design, features and precision required by the game. These renderers can be fast because they omit features or precision that would not benefit the game.

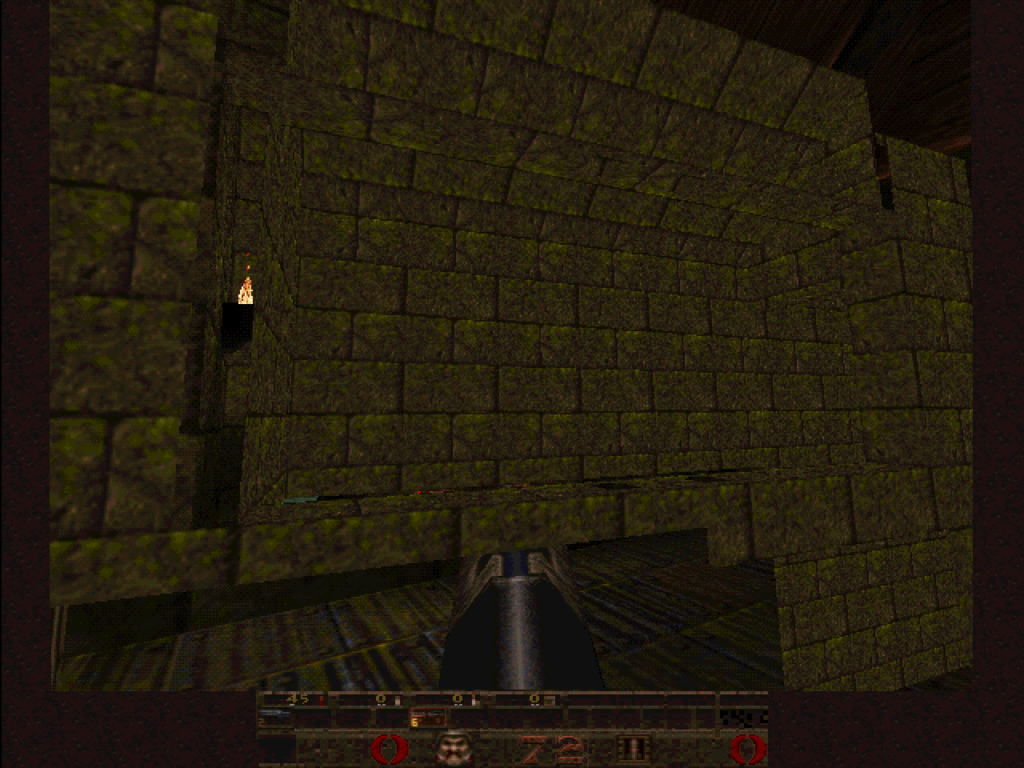

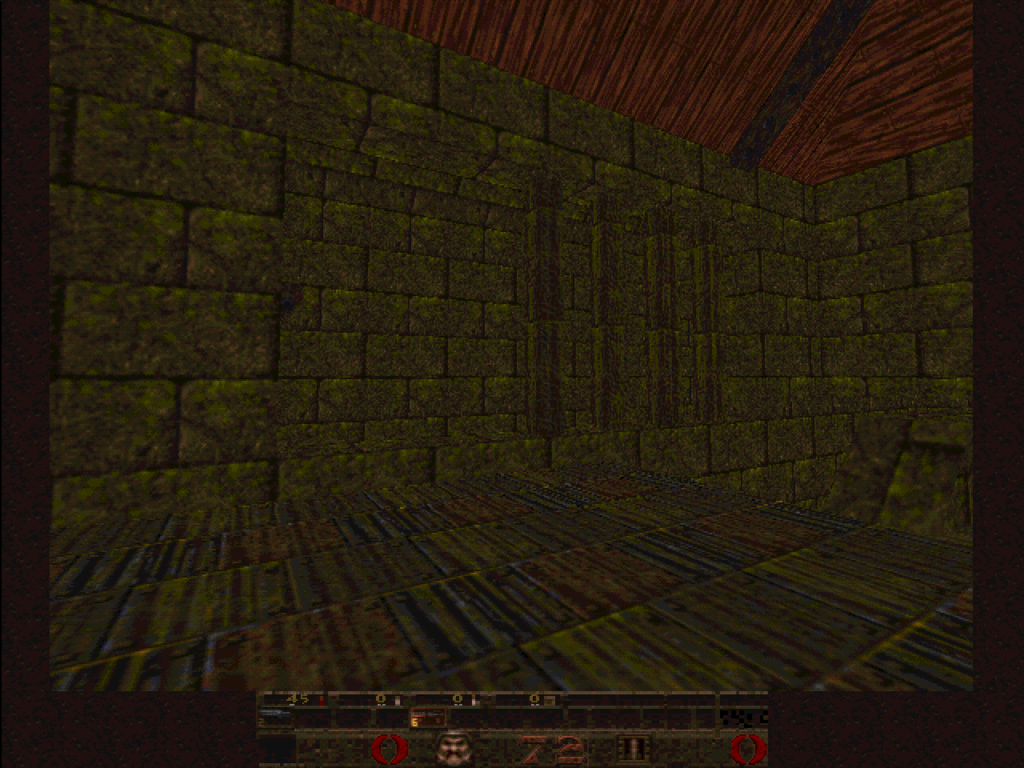

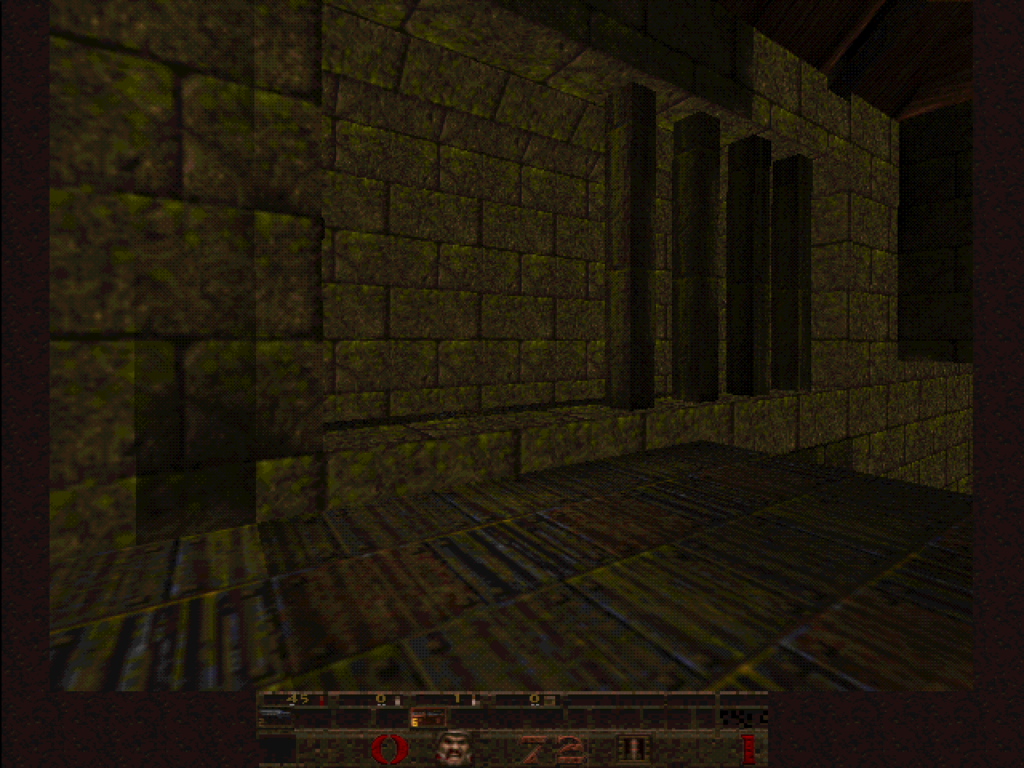

GLQuake (1997) is properly rendered with all effects on any ViRGE series card. However, the game is too demanding for these poor chips.

The series of screenshots shows how GLQuake renders the scene: (1) In the first pass, the game renders the static scene (walls…) over the previous frame in the buffer; (2) The first pass is done, and you can see that the scene does not have any lights and shadows, because GLQuake keeps its lighting baked in separate texture maps (light maps); (3) Shadows are drawn in the second pass using alpha-blended polygons over the already rendered scene, which means that you need more than twice the pixel fill-rate of other games; (4) Once both rendering passes of the static scene are done, the game draws dynamic elements like enemies, pick-up items and moving walls, and finishes by drawing the player’s gun.

If you don’t believe me, just try to run these games with general-purpose OpenGL software renderers. With bilinear texture filtering, you will get one frame every few seconds on a Pentium II. The situation improves with point-sampled textures and the fast MMX-optimized OpenGL32.dll from SGI, but don’t expect performance anywhere near Quake’s internal software renderer. You would need a 1-GHz Pentium III to match the speed of a 75-MHz S3 ViRGE GX.

Moto Racer (1997) runs just fine in 512×384 on any higher-clocked ViRGE DX/GX. A 100-MHz ViRGE GX2 can run it in 640×480. To speed up the drawing of each frame, the background with sky is drawn using 2D functions, so these pixels are not processed by the 3D hardware.

Many early games didn’t let the user change texture filtering options, and bilinear filtering was always enabled with hardware 3D acceleration. Sadly, S3 did not include an option in the driver control panel to override this setting and force point-sampled textures. That would have given desperate users 55-95% better framerates and extended the usefulness of these cards later in their lifecycle.

A common issue resulting in broken effects was related to blending options. The chip does not support per-polygon transparency combined with alpha-blending defined by an alpha texture (a texture defining which pixels are opaque, transparent or semi-transparent) on a single triangle. If a game uses this combination, the chip skips alpha-blending on that polygon completely. This combination is not supported on many early graphics chips (even ATI Rage Pro), but they mostly skip only the per-polygon transparency (which usually does not affect gameplay). This is another issue that could have been worked around in drivers.

ViRGE does not support the additive blending used in more modern games and ignores it; note the HUD and the red light corona (Aliens vs. Predator, 1999)

The lens flare from the Sun uses an unsupported transparency mode (additive? multiplicative?), which ViRGE incorrectly interprets as subtractive blending (Rollcage, 1999)

Not all transparency glitches are caused by the above. By default, ViRGE insists on smoothly blending triangles with alpha textures into whatever is behind them, even if the transparency mask has only 1 bit per pixel. Many other cards, however, don’t draw any semi-transparent pixels in this case. Their result looks a bit worse, but such triangles don’t need to be sorted (transparent pixels are not written into the Z-Buffer). If a game expects this behavior and does not sort the alpha-blended triangles, scene rendering is broken on the S3 ViRGE:

Additional notes: Polygons with semi-transparent pixels need to be sorted by the program; they cannot rely solely on the Z-Buffer. The typical approach is to draw all standard polygons with the Z-Buffer first, then sort all semi-transparent ones from far to near and draw them in a second pass over the original scene. This ensures that objects behind semi-transparent polygons are drawn properly.
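The difference between the two behaviors can be reproduced on a single pixel. Below is a minimal sketch with gray values instead of RGB. The names are hypothetical, and so is the 0.5 threshold used to interpret a 1-bit mask. The sketch reproduces the mechanism described above but does not emulate any specific card. In "alpha_test" mode, transparent texels are discarded without touching color or Z. In "blend" mode, the ViRGE-like path, the texel is always blended and its Z is always written.

```python
def draw_fragment(pixel, gray, alpha, z, mode):
    """Draw one fragment into a one-pixel frame buffer (dict with color and z)."""
    if z > pixel["z"]:                    # "less or equal" depth test failed
        return
    if mode == "alpha_test":
        if alpha < 0.5:                   # assumed threshold for a 1-bit mask
            return                        # discarded: no color write, no Z write
        pixel["color"] = gray
    else:                                 # "blend": ViRGE-like behavior
        pixel["color"] = alpha * gray + (1.0 - alpha) * pixel["color"]
    pixel["z"] = z                        # Z is written whenever a fragment is drawn


def render(fragments, mode, sky=0.8):
    pixel = {"color": sky, "z": float("inf")}    # cleared to the sky color
    for gray, alpha, z in fragments:
        draw_fragment(pixel, gray, alpha, z, mode)
    return pixel["color"]


tree_gap = (0.1, 0.0, 5.0)   # fully transparent texel of a tree polygon, near
ground = (0.3, 1.0, 10.0)    # opaque ground polygon behind the tree

unsorted_test = render([tree_gap, ground], "alpha_test")   # ground shows through
unsorted_blend = render([tree_gap, ground], "blend")       # ground rejected by Z
sorted_blend = render([ground, tree_gap], "blend")         # far to near: correct
print(unsorted_test, unsorted_blend, sorted_blend)
```

With the tree drawn first, the blending path leaves the sky color where the ground should be. Drawing the ground first, as the sorting rule above requires, fixes it.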

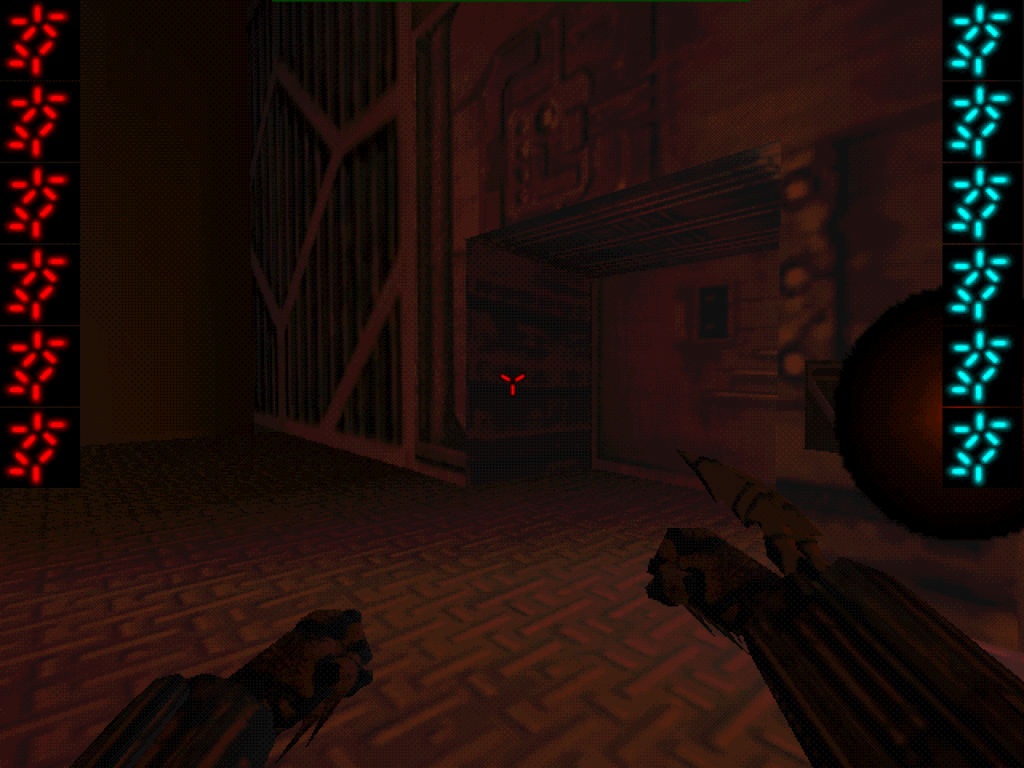

The series of screenshots shows how Viper Racing (1998) renders the scene: (1) Once the frame buffer is cleared with a solid blue color, rendering starts with the textured cloud polygons; (2) Alpha-blended trees are rendered immediately after the sky; (3) The game proceeds to render the rest of the environment, but S3 ViRGE has stored Z values for all pixels of the tree polygons (even the fully transparent ones), so the Z test prevents the ground pixels behind the trees from being rendered; (4) The scene is completed with the road and cars; (5) A closer look at the tree shows how ViRGE blends it into the sky. If the trees had been drawn at the end of the frame, ViRGE would have delivered better picture quality than other cards (which do not draw semi-transparent pixels when only a 1-bit alpha mask is involved).

This is how the game should look (rendered using 3Dfx Voodoo Graphics; sadly, captured with a phone). The card does not process the tree using alpha-blending and works only with fully transparent pixels (which are not even written into the Z-Buffer) and fully opaque pixels.

If the 1-bit alpha polygons are properly sorted, the scene can look better on the S3 ViRGE than on the 3Dfx Voodoo Graphics. Note the blending on the cage and text.

ViRGE VX: Why the slowest is also the most expensive

S3 ViRGE VX (988) is a misunderstood chip among many collectors. It was released along with the cheaper ViRGE DX and GX by the end of 1996. It was more expensive than other ViRGE cards, but it didn’t share the 3D core improvements implemented in ViRGE DX/GX. Some expected better 3D performance thanks to the expensive VRAM, but the result was the opposite.

ViRGE VX does have expensive VRAM and can have up to 8 MB of video memory (which was a lot in 1996). The VRAM, however, was not used to improve 3D performance. In fact, the 3D core cannot benefit from the dual-ported VRAM architecture, and these more complex memory chips often have slower access times, which can make it necessary to lower the graphics chip’s clock.

The ViRGE VX chip typically runs at frequencies no higher than 55MHz, and it cannot be overclocked, because the VRAM chips cannot handle more. Given (nearly) the same 3D core as the original ViRGE, there was no reason to upgrade from ViRGE (325) to ViRGE VX for 3D performance.

The real strength was elsewhere: the dual-ported VRAM provides two 64-bit data paths. One is used the standard way, and the other is used exclusively by the integrated RAMDAC. This allows higher resolutions, more colors and higher refresh rates. If you have some experience with high-end 2D/CAD accelerators equipped with VRAM, you have probably noticed that their performance is the same regardless of the resolution. Cheaper DRAM-based cards, on the other hand, get slower with increasing resolution, because the RAMDAC's bandwidth is shared with other accesses to the video memory.

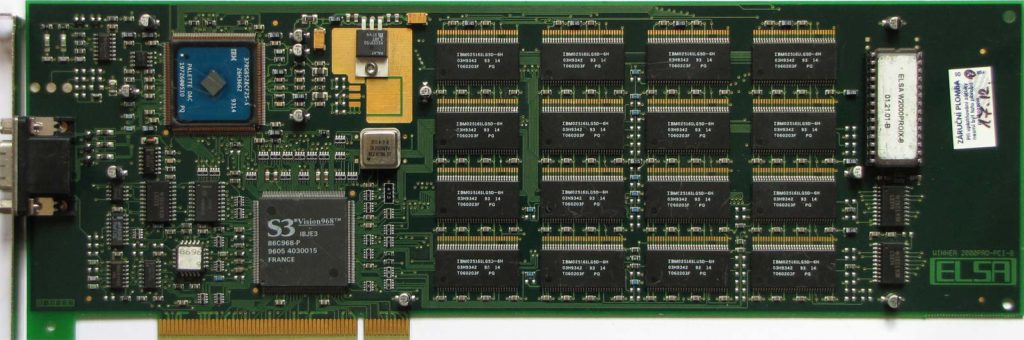

Thus, the true benefit of VRAM is visible only when the card is used with large high-resolution monitors. ViRGE VX was specifically designed for high resolutions. The new integrated 220MHz RAMDAC allowed 1280×1024 at 120Hz and 1600×1200 at 81Hz, both in true color if you had enough video memory installed. This was far beyond what a typical ~$300 graphics card offered. In fact, ViRGE VX was the replacement for high-end 2D/CAD cards like the S3 Vision 968, which cost twice as much when released.
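A quick estimate shows why the scan-out path matters in these modes. This is a sketch that ignores blanking intervals and assumes either 3 or 4 bytes per true-color pixel; the actual frame buffer layout is an assumption. On a VRAM board, this traffic goes through the separate RAMDAC data path. On a single-ported DRAM board, it competes with drawing for the same memory bus.

```python
def visible_pixel_rate_mpix(width, height, refresh_hz):
    """Visible pixels per second (in millions) that the RAMDAC must output.

    Real CRT timings add horizontal and vertical blanking, so the actual
    pixel clock has to be noticeably higher than this figure."""
    return width * height * refresh_hz / 1e6


def scanout_bandwidth_mb_s(width, height, refresh_hz, bytes_per_pixel):
    """Video memory bandwidth (MB/s) consumed only by refreshing the screen."""
    return visible_pixel_rate_mpix(width, height, refresh_hz) * bytes_per_pixel


for width, height, hz in [(1280, 1024, 120), (1600, 1200, 81)]:
    rate = visible_pixel_rate_mpix(width, height, hz)
    for bpp in (3, 4):   # packed 24-bit vs. 32-bit true color (assumed layouts)
        bw = scanout_bandwidth_mb_s(width, height, hz, bpp)
        print(f"{width}x{height} @ {hz}Hz, {bpp} bytes/pixel: "
              f"{rate:.0f} Mpix/s visible, {bw:.0f} MB/s of scan-out")
```

Both modes need roughly 155-157 million visible pixels per second. The remaining headroom of the 220MHz RAMDAC is consumed by the blanking intervals.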

S3 Vision 968 is a direct predecessor of the S3 Virge VX 988 (source: vgamuseum.com)

ViRGE VX was a fast 2D/CAD accelerator with support for large high-end monitors. It was not a good buy if you were looking for a fast 3D chip, but it was pretty cheap for what it offered in the high-end 2D/CAD segment.

ViRGE MX (for laptops) and GX2

The last ViRGE chips are called ViRGE GX2 and ViRGE MX (MX+). At the time of their release (late 1997), they were primarily oriented toward business/2D and video performance. Don’t expect any feature or performance improvements in the 3D core: the performance is identical to a ViRGE GX with the same memory type. I am not sure whether any vendor combined these with EDO RAM, as I’ve only seen boards with synchronous memory chips.

Both MX and GX2 are among the faster ViRGE chips but not necessarily the fastest (due to the synchronous memory). MX is usually clocked at 83 MHz and GX2 at 75-100MHz (low-end vs. “hi-end” boards). You need about 85-93MHz to match the speed of the EDO-based 75-MHz ViRGE DX/GX. I am not sure about ViRGE MX overclocking, but even 75-MHz low-end GX2 boards are mostly equipped with memory that can handle 100MHz, so you can easily overclock the board to the maximum using tools like PowerStrip (the ViRGE chip and its memory always run at the same frequency). The best EDO-based ViRGE DX/GX cards like STB Nitro 3D can be overclocked to 83 MHz which makes them the fastest ViRGE cards for games (or anything else involving heavy texturing).

The core was enhanced with dual screen pipelines. This means that MX and GX2 can clone one screen to two display outputs that don’t have to share the same refresh rate. In the case of the GX2, you need a card with a composite or S-Video TV output in addition to the standard VGA output to see any benefit from this feature. It allows you to clone one screen to both outputs without lowering the VGA refresh rate to the fixed 60/50Hz (NTSC/PAL) refresh rate of the TV output (the resolution is usually scaled down by an additional TV-out chip).

The dual screen pipeline in the ViRGE MX chip allows displaying the same picture on the laptop’s internal LCD screen and an external VGA monitor without forcing the VGA CRT to run at 60Hz (this was not possible on any non-S3 laptop VGA controller before). When Windows 98 was released, it became possible to use the dual screen pipeline to display a different picture on each output (“extended desktop”).

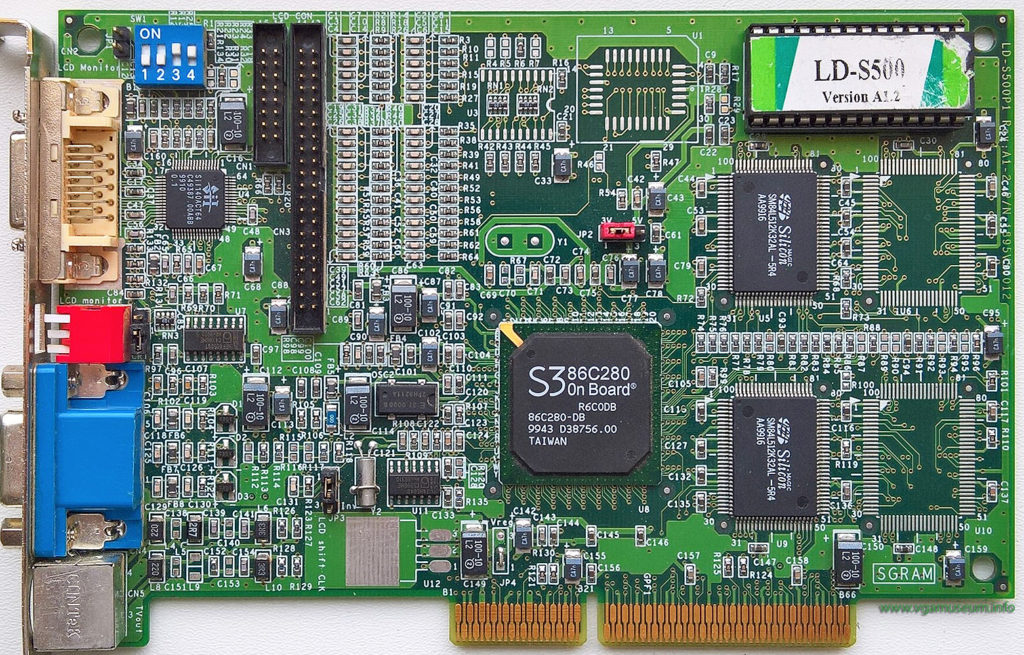

By using the laptop S3 chip on a desktop PC board, it is possible to drive a DFP-compliant LCD panel and use it with an analog VGA screen or TV in the extended desktop mode (source: vgamuseum).

ViRGE MX appeared not only in laptops. You can also find desktop PC boards equipped with a DFP digital output (a DVI predecessor) in addition to VGA. These boards can handle early desktop LCD screens with resolutions up to 1280×1024.

Interesting oddities

All ViRGE cards support a special feature that allows using Z-Buffer without allocating any additional memory for it. S3 calls it “MUX buffering” and it allows the chip to do multi-pass rendering, where the back buffer memory is used for Z-Buffer data during the first pass and then for color data in the second pass. This happens automatically when there is not enough memory for the Z-Buffer after allocating space for color buffers.

MUX buffering works only in 16-bit color modes (the Z-Buffer is always 16-bit, regardless of the color depth). The last bit of each pixel tells whether the stored data is a Z or a color value. At the beginning of each frame, you can fill the back buffer with any color data (solid color, background bitmap, previous frame…). Then the chip starts the Z-Buffer pass, in which it draws all polygons as Z (depth) values only. All texture/color processing is skipped, so the Z-Buffer pass is very fast. The Z value of a new pixel is written into the back buffer if the stored value is color data (2D background) or if the new value passes the Z test against the previously stored Z value.

After this pass, the back buffer contains a mix of color data from the 2D background (in areas of the screen where no polygons are located) and Z data of the 3D scene. ViRGE now needs to process the whole frame again. It again starts by calculating the Z value of each pixel. If and only if the Z value is equal to the Z value stored in the back buffer during the first pass, ViRGE proceeds to calculate the final color of that pixel and overwrites the value in the back buffer with the color data. Once the second pass is complete, the back buffer contains only color data and is ready to be flipped to the front (color) buffer and sent to the screen. Alpha-blending is not available with MUX buffering (writing semi-transparent pixels would need both the color and Z values of the previous pixel stored in video memory).
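The two passes can be modeled in a few lines. This is a software sketch of the scheme as described above, not S3's implementation. The word layout is an assumption made for illustration: bit 0 serves as the Z/color tag, and bits 1-15 carry a 15-bit payload. The actual bit S3 used is not stated here. The function and variable names are hypothetical, and the depth test is “less or equal”.

```python
# Hypothetical 16-bit word layout for this sketch: bit 0 is the tag
# (1 = Z value, 0 = color) and bits 1-15 carry a 15-bit payload.
Z_TAG = 1


def pack_z(z):
    return (z << 1) | Z_TAG


def pack_color(rgb555):
    return rgb555 << 1


def is_z(word):
    return (word & Z_TAG) == Z_TAG


def payload(word):
    return word >> 1


def mux_render(back_buffer, fragments, shade):
    """Two-pass MUX buffering over a back buffer already filled with color.

    fragments: (pixel_index, z, polygon_id) tuples, z being a 15-bit depth.
    shade: function returning the 15-bit color of a polygon's fragment.
    """
    # Pass 1: depth only. A fragment wins if the pixel still holds color
    # (background) or if it passes the "less or equal" test against stored Z.
    for idx, z, _ in fragments:
        word = back_buffer[idx]
        if not is_z(word) or z <= payload(word):
            back_buffer[idx] = pack_z(z)
    # Pass 2: Z is computed again; only the fragment whose Z equals the stored
    # value is shaded, and its color replaces the Z value in the same word.
    for idx, z, poly in fragments:
        word = back_buffer[idx]
        if is_z(word) and payload(word) == z:
            back_buffer[idx] = pack_color(shade(poly))
    return back_buffer


BACKGROUND = 0x1234                        # any 15-bit background color
colors = {"A": 0x7C00, "B": 0x03E0}        # red and green in RGB555
fragments = [(0, 100, "A"), (1, 100, "A"),     # polygon A, farther
             (1, 50, "B"), (2, 50, "B")]       # polygon B, nearer
buf = mux_render([pack_color(BACKGROUND)] * 4, fragments, colors.get)
print([hex(payload(w)) for w in buf])
```

After both passes, pixel 1 shows the nearer polygon B and pixel 3 keeps the background. No Z words remain in the buffer.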

You might have noticed that using one bit to tell the chip whether the data is a Z or a color value means that the color and depth precision drops to 15 bits per pixel. That is true. I am not sure about the Z-Buffer, but colors look the same with and without MUX buffering. Given the heavy dithering in high-color modes on ViRGE, I assume that 15 bits per pixel are used for color data by default (thus, each color component is stored in 5 bits).
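If that assumption holds, the color path would look like RGB555: 5 bits per component with one bit to spare. The sketch below only illustrates that format; placing the spare bit at bit 15 is an assumption, and the 8-bit expansion by bit replication is a common convention rather than documented ViRGE behavior.

```python
# RGB555 packing sketch: 5 bits per color component, bit 15 left free.
# Which bit ViRGE actually uses as the MUX flag is an assumption here.
def pack_rgb555(r, g, b):
    """Keep the top 5 bits of each 8-bit channel: 0RRRRRGGGGGBBBBB."""
    return ((r >> 3) << 10) | ((g >> 3) << 5) | (b >> 3)


def unpack_rgb555(word):
    """Expand back to 8 bits per channel by replicating the high bits."""
    r5, g5, b5 = (word >> 10) & 0x1F, (word >> 5) & 0x1F, word & 0x1F
    return tuple((c << 3) | (c >> 2) for c in (r5, g5, b5))


print(hex(pack_rgb555(255, 128, 0)))             # 0x7e00
print(unpack_rgb555(pack_rgb555(200, 100, 50)))  # (206, 99, 49)
```

Only 32 levels per channel survive the round trip, which is why high-color modes need dithering to hide banding.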

I am among those who somehow like the high-color dithering on ViRGE (way more than the dithering on ATI cards), and it is a part of my 1990s gaming experience. The low internal color precision leads to interesting (but annoying) alpha-blending artifacts, where alpha-masked polygons show dithering even on pixels that should be fully transparent.

Tire smoke behind the vehicles shows the ugliness of ordered-grid dithering artifacts when too many alpha-blended polygons are drawn over each other (Rollcage, 1999)
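Why stacked alpha-blended layers make the grid worse can be seen with a generic 4×4 ordered (Bayer) dither. This is a sketch of the technique in general: ViRGE's real dither matrix, rounding and 5-to-8-bit expansion are not documented here, and the `level * 8` expansion below is an assumption. Because the matrix is fixed to screen positions, the same pixels round down (or up) on every blending pass, so the error accumulates instead of averaging out.

```python
# Generic 4x4 ordered (Bayer) dithering from 8-bit to 5-bit channels.
# Illustration only: ViRGE's real dither matrix and rounding may differ.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def dither_to_5bit(value, x, y):
    """Quantize an 8-bit channel value (0-255) to a 5-bit level (0-31)."""
    # One 5-bit step covers 8 units of 8-bit space; the screen-aligned
    # matrix spreads the rounding threshold over that step (0 to 7.5).
    threshold = BAYER_4X4[y % 4][x % 4] * 8 / 16
    return min(31, int((value + threshold) // 8))


def dither_tile(value):
    """Dither a flat 4x4 area of one color."""
    return [[dither_to_5bit(value, x, y) for x in range(4)] for y in range(4)]


def blend_layers(src, dst, alpha, layers, x, y):
    """Blend `layers` copies of src over dst at pixel (x, y), re-dithering
    after every layer. Assumes stored levels expand back as level * 8."""
    level = dither_to_5bit(dst, x, y)
    for _ in range(layers):
        blended = src * alpha + (level * 8) * (1 - alpha)
        level = dither_to_5bit(blended, x, y)
    return level


# 4 lies halfway between 5-bit levels 0 and 1: the result is a checkerboard.
for row in dither_tile(4):
    print(row)

# Eight layers of a faint source (alpha 0.25) over black: two pixels of the
# same flat area end up three levels apart, because each matrix position
# rounds the same way on every pass.
print(blend_layers(20, 0, 0.25, 8, 0, 0), blend_layers(20, 0, 0.25, 8, 0, 3))
```

A single dither of the same flat value 20 would only produce levels 2 and 3; the repeated passes spread the pixels from 0 to 3, which is the kind of visible grid the smoke in the screenshot shows.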

It is little known that ViRGE (unlike many other chips of the era) supports 24-bit true-color precision (RGB888) in 3D. Using it in true-color modes lets you avoid the typical dithering artifacts and enjoy better picture quality. Interestingly enough, the true-color precision does not lead to a significant performance loss – the framerate is just 7% lower than in high-color modes. This sounds strange at first, but it makes sense. ViRGE accesses memory very inefficiently during texturing, as it needs to jump around in memory a lot and always reads just small pieces of data. The memory chips spend most of the time accessing the first word at a new location, while all subsequent words are typically read at a rate of one word per clock cycle. Also, the required bandwidth is not doubled, as only the color buffer is larger. The Z-Buffer is always 15/16-bit, and texture color formats are independent of the front/back buffer color depth.
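The argument can be put into a toy cost model. Every number in it is hypothetical (4 texel reads for bilinear filtering, 6 cycles of first-word latency, a 3-byte true-color pixel), so its percentage is not meant to reproduce the measured 7%. It only shows the shape of the reasoning: when latency-bound texture reads dominate, making the streamed color writes 50% wider barely moves the total.

```python
# Toy cost model for one bilinear-textured, Z-tested pixel. All cycle counts
# are hypothetical, not measured ViRGE timings.
def cycles_per_pixel(color_bytes, texel_reads=4, first_word_cycles=6,
                     bus_bytes=8, z_bytes=2):
    # Scattered texture reads: every texel pays the first-word latency.
    texture = texel_reads * first_word_cycles
    # Z read, Z write and color write stream along the span at roughly one
    # 64-bit bus word per cycle, so they cost a fraction of a cycle per pixel.
    streamed = (2 * z_bytes + color_bytes) / bus_bytes
    return texture + streamed


high_color = cycles_per_pixel(color_bytes=2)  # 16-bit color buffer
true_color = cycles_per_pixel(color_bytes=3)  # 24-bit (RGB888) color buffer
print(f"{high_color} vs {true_color} cycles: "
      f"{true_color / high_color - 1:.1%} more work per pixel")
```

The real chip has costs this model leaves out on purpose (page misses on the color and Z spans, triangle setup), which is one reason a measurement lands higher than such a sketch.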

OpenGL support (Windows 9x)

Although S3 mentioned OpenGL in the list of supported APIs, they did not create any OpenGL driver for ViRGE chips (a common practice back then). However, this doesn’t mean that you cannot run OpenGL programs on your ViRGE. There are three meaningful ways to accelerate OpenGL games/programs:

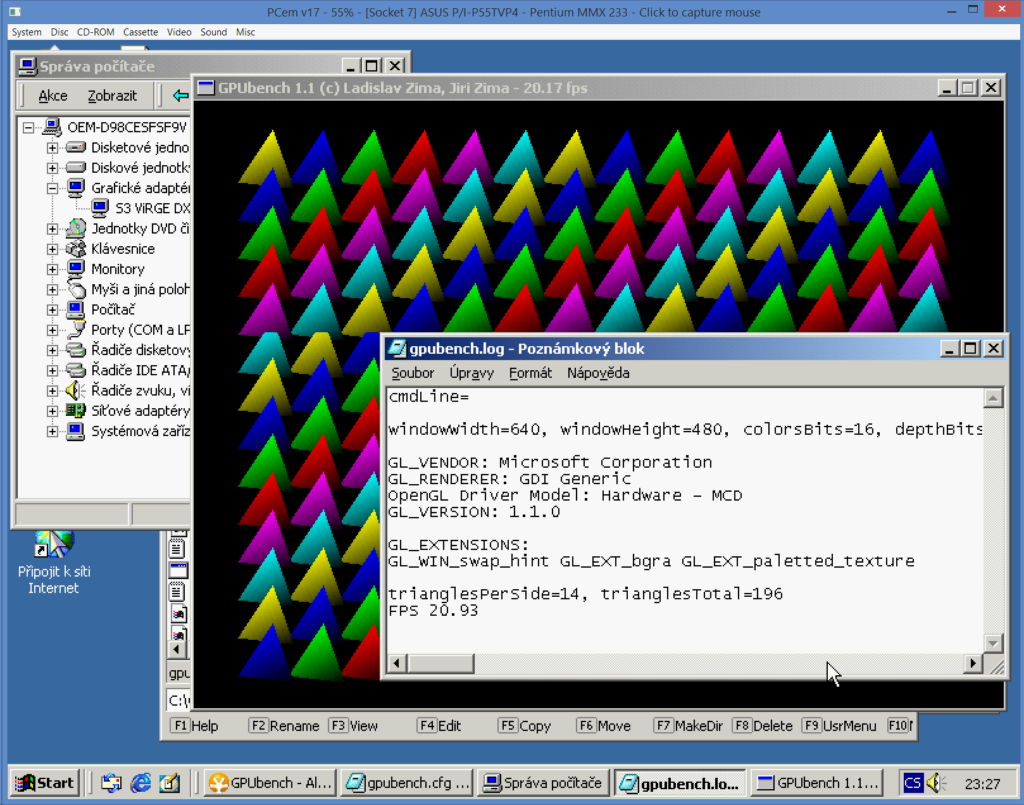

- GLQuake-based games: S3 created a wrapper that translates the OpenGL API calls used in GLQuake to Direct3D (DX5/DX6) calls. The wrapper is distributed in the form of an OpenGL32.dll file that needs to be put in the game directory. To the graphics driver, the game then looks like any other Direct3D game. Quake is extremely demanding on texturing performance, because it has high-resolution textures and draws every polygon twice (the first pass applies a material texture, the second pass adds a shadow texture using alpha-blending). This is too much for the poor ViRGE, so even the fastest ones (100-MHz GX2) can render just 20 fps in 512×384 with bilinear-filtered textures.

- Quake II-based games: Techland created another OpenGL wrapper for their Q2-based game Crime Cities (but it works with Quake II and other games too). It translates OpenGL API calls to ViRGE's native S3D calls, so it can be more efficient than S3's wrapper. Again, the wrapper implements only the features used by the Quake II engine (so it does not work with every program/game). With tweaked details, the game is playable in 400×300 on the fastest ViRGE cards. This is the OpenGL wrapper you should always try first – it is usually faster than the other options.

- Mesa3D: Brian Paul (the author of the Mesa3D OpenGL libraries) created a Windows 9x OpenGL32.dll driver for S3 ViRGE cards. It is a full OpenGL API implementation, so it works even with games not based on Quake engines (but it is slower in Quake I/II than the previous options). This is what we used to measure ViRGE's OpenGL performance. Sadly, the driver works only in full-screen mode; windowed OpenGL programs are drawn incorrectly.
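The core idea behind the game-specific wrappers can be sketched as follows: collect immediate-mode `glBegin`/`glVertex`/`glEnd` calls and hand complete triangle batches to the underlying 3D API. This is a hypothetical Python illustration, not S3's or Techland's code (the real wrappers are DLLs exporting the OpenGL C entry points). The `GL_*` values are the real OpenGL enum constants; the class and the `submit_triangles` callback are invented for the example.

```python
# Hypothetical sketch of an immediate-mode OpenGL wrapper (not S3's or
# Techland's code). The GL_* values are the real OpenGL enum constants;
# the class and the submit callback are invented for illustration.
GL_TRIANGLES = 0x0004
GL_TRIANGLE_FAN = 0x0006
GL_POLYGON = 0x0009


class ImmediateModeWrapper:
    """Collects glBegin/glVertex/glEnd calls and emits triangle batches."""

    def __init__(self, submit_triangles):
        # Backend draw call, e.g. a Direct3D or S3D triangle submission.
        self.submit_triangles = submit_triangles
        self.mode = None
        self.vertices = []

    def glBegin(self, mode):
        self.mode = mode
        self.vertices = []

    def glVertex3f(self, x, y, z):
        self.vertices.append((x, y, z))

    def glEnd(self):
        v = self.vertices
        if self.mode == GL_TRIANGLES:
            # Independent triangles: every 3 vertices form one triangle.
            tris = [tuple(v[i:i + 3]) for i in range(0, len(v) - 2, 3)]
        elif self.mode in (GL_TRIANGLE_FAN, GL_POLYGON):
            # Convex polygons and fans share the first vertex: (v0, vi, vi+1).
            tris = [(v[0], v[i], v[i + 1]) for i in range(1, len(v) - 1)]
        else:
            # Like the real wrappers, support only what the target engine uses.
            raise NotImplementedError(f"primitive mode {self.mode!r}")
        self.submit_triangles(tris)


# Usage: a five-sided convex polygon becomes three triangles.
batches = []
gl = ImmediateModeWrapper(batches.append)
gl.glBegin(GL_POLYGON)
for p in [(0, 0, 0), (2, 0, 0), (3, 1, 0), (1, 2, 0), (-1, 1, 0)]:
    gl.glVertex3f(*p)
gl.glEnd()
print(len(batches[0]))  # 3
```

Raising an error for unsupported modes mirrors the limitation mentioned above: a wrapper that implements only what one engine needs is small and fast, but it breaks on programs that use anything else.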

OpenGL support (Windows 2000)

There is in fact one operating system that supports OpenGL hardware acceleration on any ViRGE card, and the driver is even bundled with the operating system. Microsoft used the S3 ViRGE driver as an example of a full-featured video driver (with Direct3D and OpenGL MCD support) in its Windows 2000 DDK (Driver Development Kit) and also included it with the final release of the system. The DDK contains the whole source code of the driver (excluding the mini-port part), so any hardware vendor can see how the features are implemented.

You can modify the driver and compile it yourself. You just need the Windows 2000 DDK and Visual C++ 6.0 (SP2).

The driver location: X:\NTDDK\src\video\displays\s3virge\

To build the driver (s3mvirge.dll), just set up the free (retail) build environment, go into its directory and run "nmake" and "build -cZ". There are also a few other caveats before you get a fully working driver in a distributable form. For example, there is no .inf file, so you need to create a "skeleton" of it using a supplied tool. The easier way is to combine the new DLL file with any existing ViRGE driver.

The OpenGL MCD driver works surprisingly well. It allows windowed rendering with multiple overlapping OpenGL windows, and it is robust enough to be used with software packages of that era like 3D Studio MAX or LightWave3D. Only polygons with certain unsupported blending features are rendered by the CPU and then sent to framebuffer memory (these polygons are drawn more slowly but without any graphics glitches).

The driver uses OpenGL hardware acceleration only if the desktop color depth is set to 16-bit (high-color). I found only one issue – the driver switches to software-only mode after any color depth or resolution change, and you need to restart the system to get hardware acceleration back.

It's a shame that S3 was not able to provide such a driver for Windows NT 4.0. I still remember all those online discussions with people looking for an affordable OpenGL accelerator. In the first quarter of 1997, you could buy a Matrox Millennium (1) card that supported OpenGL MCD but could accelerate neither textures nor transparency effects. The other option was the 3Dlabs Permedia (1), which had a complete OpenGL ICD driver (for both Windows 9x and NT) and could accelerate filtered textures, alpha and other goodies, but it was very slow (except for filtered textures, it had similar or lower performance than a high-clocked ViRGE DX/GX). Its dithering artifacts on alpha-blended polygons were worse than ViRGE's, and its bilinear texture filtering was one of the worst you could see. ViRGE was never a good choice for GLQuake, but it could have been a good, cheap speed-up for 3D modeling programs in the early days of 3D acceleration.

Performance-wise, Microsoft's MCD driver is worse than Brian Paul's ICD driver for Windows 9x. Simple smooth-shaded triangles are drawn at about the same speed as alpha-blended ones (so the speed is halved). I assume that the MCD driver's memory management is not as good and that large textures are sometimes drawn by the CPU. Even with textured polygons that are drawn by the ViRGE, the speed is 25-30% lower than with the ICD. The high driver overhead limits the system to only about 30 thousand draw calls with MCD (compared to 140 thousand draw calls with the ICD).

I tried the GLQuake "timedemo" with an STB Nitro 3D/GX (S3 Virge GX, 75MHz, 2MB EDO RAM), and these are the results for the MCD driver compared to S3's Direct3D wrapper:

- MCD in Windows 2000: 8.6 fps (point sampling) / 8.0 fps (bilinear filtering)

- S3 Direct3D DX5 wrapper for GLQuake in Windows 98: 14.8 fps (point sampling) / 11.3 fps (bilinear filtering)

I assume that Microsoft did not spend much time optimizing the driver and preferred it to be easy to read for other programmers.
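The timedemo numbers can be compared directly. The short calculation below uses only the fps values listed above. One possible reading (an inference, not a measurement) is that switching to bilinear filtering costs the MCD path far less than the Direct3D wrapper, which fits the idea that the MCD path is held back by driver overhead rather than by the chip's texturing.

```python
# fps from the GLQuake timedemo on the STB Nitro 3D/GX listed above
results = {
    "MCD (Windows 2000)": {"point": 8.6, "bilinear": 8.0},
    "S3 D3D wrapper (Windows 98)": {"point": 14.8, "bilinear": 11.3},
}


def filtering_cost(r):
    """Fraction of the frame rate lost when switching to bilinear filtering."""
    return 1 - r["bilinear"] / r["point"]


for name, r in results.items():
    print(f"{name}: bilinear filtering costs {filtering_cost(r):.0%}")
# MCD (Windows 2000): bilinear filtering costs 7%
# S3 D3D wrapper (Windows 98): bilinear filtering costs 24%

for mode in ("point", "bilinear"):
    ratio = (results["S3 D3D wrapper (Windows 98)"][mode]
             / results["MCD (Windows 2000)"][mode])
    print(f"{mode}: the D3D wrapper runs {ratio:.2f}x faster than MCD")
# point: the D3D wrapper runs 1.72x faster than MCD
# bilinear: the D3D wrapper runs 1.41x faster than MCD
```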

Very impressive article! Well done! Have you also looked at the S3 Trio3D and Trio3D/2X cards? To the best of my understanding, they are also based on the Virge but offer some real improvements beyond being AGP; there are two distinct variants, as I remember. Anyway, thank you for a very comprehensive look at these mostly misreported cards. As you say, current reviewers take them "out of time". As a note, I use a Number Nine Reality 332 (Virge 325) in my old DOS/Win 3.1 computer. It has fantastic, clean output quality and seems to be one of the best of the first Virge cards available.

Thanks! Yes, these are great 2D/DOS cards. I use my STB Nitro 3D/GX for things like 640×400@120Hz in DOS/Build engine games. The output quality on these is superb. I had trouble only with my GX2, but I believe it just needed its capacitors replaced (plus the trick of changing the black level voltage through its registers).

I was in fact thinking about including the Trio3D/2X cards. I even have one and tested it in multiple games. However, it was not faster than the ViRGE GX2 in any game I tried, and it did not support S3D, so I was not able to run my OpenGL tests on it (to precisely measure its advantages over previous 3D cores). From my point of view, all the ViRGE cards had some real advantages when released – even the GX2 was usable for low-end 3D gaming and was pretty good at multimedia. On the other hand, the Trio3D was all about the low price.

Hi Cyrix6x86 and SWARM,

Once again, I have to praise this SUPERB site and the best review of S3 I have ever read!!! You are talking about the Trio3D/2X, so I have a little reference for you. I have tested the AGP version; the problem is the drivers. The original drivers are a problem for OpenGL and DirectX support (it is supported, but with many errors). With the original driver, only a few games will run, but look for the ENGINEERING RELEASE driver!!

RESULTS with the ENGINEERING RELEASE driver for the Trio3D/2X AGP 4MB and more:

– DirectX games run, such as Incoming, GTA2, MK4 (even translucent textures), Rogue Squadron, Dune 2000, etc., with NO MISTAKES in BENCHMARK 99!!! I think DX5 and DX6 support is good, but there are sometimes small errors, as in MK4… Viper Racing is HORRIBLE with all tested drivers… All the games above run without errors and are fully playable, except the mentioned MK4 and Viper Racing.

– OpenGL support, tested:

Quake 1 – 100% beautiful on the Trio, 400×300 = 13-18 fps

Quake 2 – 320×240 at full speed (up to 25 fps), 400×300 (8-15 fps)

– S3D support on the AGP Trio3D/2X:

Hellbender, but I think it doesn't use S3D but DirectX, since this game was produced by MS…

GX2 vs. AGP Trio in VESA:

– SWARM is completely right, the GX is FASTER. Tested in VESA with all the benchmarks from Phil's Computer Lab: the results are 100% vs. 99.9%, so the Trio is 0.1% slower.

GX2 vs. AGP Trio in OpenGL:

– Quake is faster on the GX2 than on the Trio; Quake 2 is the same (both have small glitches in Q2 due to the AGP slot)

– GTA2 is faster on the Trio and has 100% correct translucent fires (textures are without mistakes on the Trio)

– Viper Racing – disgusting on the Trio, nicer on the GX2 (translucent textures work on the GX2 too, but all textures lack the Z-buffer on both the GX2 and the Trio…). On the GX2 it is nicely playable at 512×384 = 16-20 fps; the Trio is slower and disgusting, unplayable

– Mark 99 – graphical errors on the GX2, slower than the Trio; the Trio has 100% of the textures and effects, only 4 fps in rocket racing, but no graphical mistakes…

(These comparisons were done on a Duron 600, because of the AGP slot…)

(The best is the NITRO PCI because of the PCI slot, with about 10% speed loss vs. the GX2. The GX2 is the fastest EDO RAM card ever!!! The GX2 lacks S3D compatibility with Descent 2… other S3D games gave me no problems… In DOS, the GX2 has errors in Terminal Velocity, while Screamer 1 runs SUPERB…)

(On the GX1 NITRO, only one title gave me problems: Time Warriors. Tested on VX + first-release Virge + GX + DX + GX2; I think no Virge is supported except the DIAMOND…)

(The PCI Virge was tested on Pentium 75, 90, 100, 133, 166 MMX and 200 MMX, with EDO RAM.)

Good LUCK!!

Thank you for all the findings! I will put my Trio3D in a test computer and look at the engineering release of the drivers. This sounds interesting.

Amazing article. It is good to see articles like this. When you read about S3, you only read "it is crap", but until 3dfx came along, the card was a good option if you had little money.

Anyway, you forgot one thing: the bad manufacturers. They did so much damage to S3. I think they were responsible for the bad reputation of those cards.

You are right. Bad manufacturers caused a lot of harm, and not only to S3. Even though they used the same chips, a cheapo sub-45MHz Virge DX/GX was almost 50% slower than a good 74MHz Virge DX/GX (or even more than 50% slower if the fast one had EDO RAM and the slow one had SDRAM). Thankfully, Virge required a 64-bit data bus. Cheap OEM SiS 6326 cards were often crippled to just a 32-bit data bus by using only half of the memory chips on the PCB. A 32-bit data bus was a problem with certain S3 Savage cards as well. This explains why the experience with low-end cards varied so much among users.
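A back-of-the-envelope way to see why memory clock and bus width matter so much: treat peak bandwidth as bus width in bytes times memory clock, assuming one bus word per clock (the burst rate described in the true-color section). The 45 MHz entry is the upper bound of the "sub-45MHz" cards, and the 32-bit entry is a hypothetical crippled board, not a specific SiS model. The clock ratio alone gives 1 − 45/74 ≈ 39% less peak bandwidth; cards clocked further below 45 MHz widen the gap toward the almost 50% mentioned above, and halving the bus halves it again.

```python
# Peak bandwidth if one bus word moves per memory clock. Scattered texture
# reads get far less than this; the numbers only compare configurations.
def peak_bandwidth_mb_s(bus_bits, clock_mhz):
    """Bytes per clock times millions of clocks per second (1 MB = 10**6 B)."""
    return bus_bits / 8 * clock_mhz


configs = {
    "good Virge DX/GX, 64-bit @ 74 MHz": (64, 74),
    "cheap Virge DX/GX, 64-bit @ 45 MHz": (64, 45),
    "hypothetical 32-bit card @ 45 MHz": (32, 45),
}
for name, (bits, mhz) in configs.items():
    print(f"{name}: {peak_bandwidth_mb_s(bits, mhz):.0f} MB/s")
# good Virge DX/GX, 64-bit @ 74 MHz: 592 MB/s
# cheap Virge DX/GX, 64-bit @ 45 MHz: 360 MB/s
# hypothetical 32-bit card @ 45 MHz: 180 MB/s
```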

Exactly. I'm planning to upgrade the memory chips from 50MHz to 73MHz and reflash the BIOS to overclock it to 83MHz.

They were called decelerators mostly because they were bundled with computers for too long. ViRGE was showing its age in late 97, but budget computer integrators would still sell them as the low-cost option in 98 and 99.

Here’s an issue of PC Magazine from 1997-12-02: https://books.google.com/books?id=uaTgf95ualMC&pg=PA261 . There’s only one ViRGE and its 3D performance is already severely lagging behind the new 3D chips.

I just found a budget computer test in a Polish magazine from April 1999, and there were still configurations with a ViRGE. It was already obsolete in 98.

I still like the ViRGE for a Pentium + Voodoo PC. It has great DOS compatibility, is a fast GDI card and is great for video with its color space conversion and scaling.

Yes, that's true. The new 3D accelerators that came in the middle of 1997 were all much better. S3 knew that (it was already working on the Savage series), and even the ViRGE GX2 was already marketed as a TV/multimedia solution (and not for 3D).

ViRGE was on the market for a very long time. On the other hand, apart from 3D, it was a good chip. I think people like my father never used any 3D feature of their computers until Windows Vista found its way to his computer with its 3D-accelerated desktop compositor. For such people, ViRGE was more than enough for a long time, even though its 3D was already a joke.

I too like the combination of ViRGE+Voodoo. Exactly my current Win9x setup.

I love everybody who cares about this topic and everybody who makes an effort to clear its reputation. I am just a common person who likes retro; I have 3Dfx + S3 Savage + S3 Trio + S3 ProSavage + S3 Virge (and other cards for comparison) in 21 retro PCs altogether. I have many facts of my own, and these lines of yours warm my heart, I LOVE THESE LINES. It is easy to share our feelings about S3 or 3Dfx chips in casual conversation, but it is so much harder to do these tests and BE A GENIUS LIKE YOU, able to state with certainty the facts you have described here! HATS OFF!! I always think about what it means to own a 3Dfx nowadays vs. at the time the card was released (the same with S3 or SiS chips, etc.). This is my favorite topic, and you HIT the BULLSEYE with these lines!!! What I want to tell you is HATS OFF, please NEVER STOP doing this favor for the world, it is a very beautiful good deed!! This is my praise for you; it is not necessary to reply to this message, I just want to be sure you get some praise for the GREAT JOB you have done and are still doing! Good LUCK, NEVER STOP, I SEE EVERYTHING YOU ARE DOING… Greetings from Slovakia to YOU. (This site is being shared among all my friends who love RETRO.)

AGAIN: YOU ARE A GENIUS!!!!

Excellent article, but is what is mentioned here true:

https://www.gamedeveloper.com/programming/an-opengl-update-for-game-developers

that “SGI’s DDK is also an ICD, but comes with a sample driver (for Virge/GX) as an example”. I really cannot find any information about that SGI DDK…

Allegedly, the "Microsoft 3D Device Driver Kit" that was released in 1998:

https://web.archive.org/web/20000903193455/http://www.microsoft.com/HWDEV/video/3Ddrv.htm

contains an OpenGL ICD driver for the S3 Virge. It was not free like their usual DDK but cost USD 5000, which is probably why no regular people have it.

Essentially, it was the SGI DDK, and MS only distributed it:

“The new kit was developed by SGI and Microsoft under the Fahrenheit graphics partnership initiative announced in December 1997”

as announced on the SGI website in 1997:

https://web.archive.org/web/19980203203624/http://www.sgi.com/Headlines/1997/December/ddk_release.html