
Playing with TIGA #1

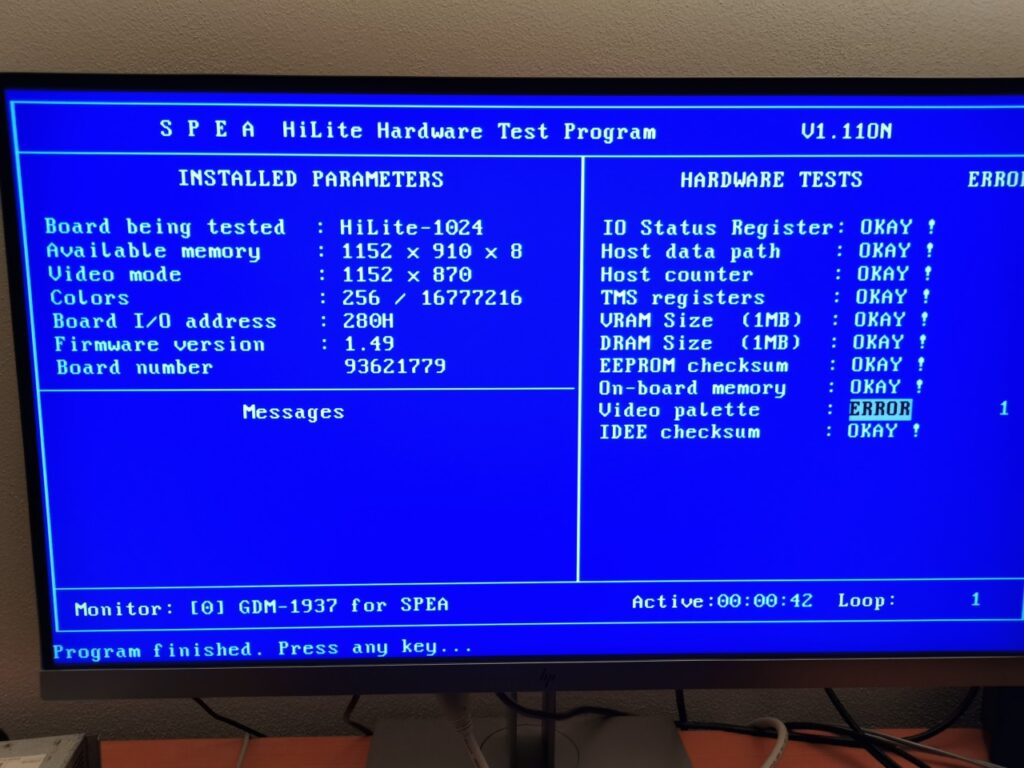

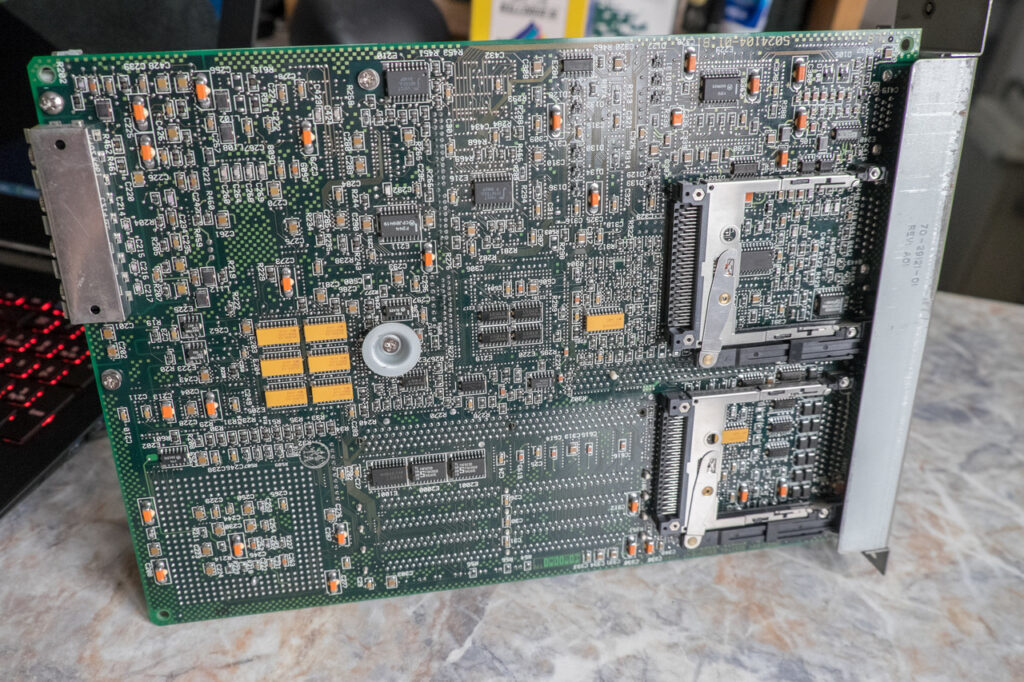

This is my new project for the next few months: the SPEA Graphiti HiLite 1024, a TI TMS34020-based professional CAD card (“TIGA”). The video output does not work properly because some traces between the GPU and the RAMDAC are bad, but the rest of the card seems to be OK. Once I fix it, I would like to play with the chip and program some benchmarks to measure its real performance. TIGA cards seem to be valued by collectors, but there is very little information about what can actually be done with them. TMS340x0 chips are fully programmable 32-bit integer CPUs, and this (rather low-end) card has 1MB of program/data memory in addition to 1MB of framebuffer memory. It is like a complete computer on a card.

These chips were used in the graphics subsystem of the Sun 386i UNIX workstation and in some CRT terminals (such as the DEC VT1000). There were even Amiga Zorro cards with these chips (boosted with TI floating-point co-processors), but presumably the concept was too complicated at a time when most people cared only about BitBlt and basic line-drawing acceleration.

(Fortunately, my Siemens Nixdorf PCD-4Lsx PC is just big enough to accommodate one full-size ISA AT card. The card is very picky and refuses to work in Pentium systems or in anything with an ISA bus clock above ~8MHz.)

Preparing my SGI Indy for a vintage computer event

Bytefest 2019 is coming and I have only two weeks to prepare all the machines I want to take with me. I want to show this Indy together with a Nintendo 64 game console, because Indys were often used for N64 game development (after all, the N64 hardware was designed by SGI). It is nice to see that the Indy’s VINO interface supports progressive scanning (used by game consoles and old 8-bit computers) on its composite/S-Video inputs, unlike the newer SGI O2 and SGI Visual Workstation 320. The main planned exhibit, however, is a vintage Czechoslovak plotter (Aritma Minigraf), connected through our custom interface (modified to use a serial port), which will plot processed images of visitors taken with the Indy’s bundled webcam.

I’m surprised that the serial ports on the Indy support speeds only up to 38.4 kb/s. That is pretty slow for a computer introduced in 1993. Maybe that’s one of the reasons why the serial port speed is not even mentioned in the user guide. They just didn’t care.
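To put 38.4 kb/s into perspective, here is a rough sketch of the arithmetic. It assumes a standard asynchronous 8N1 frame (1 start bit, 8 data bits, 1 stop bit, so 10 bits on the wire per byte) and a line with no flow-control pauses; the function names and the 100,000-byte job size below are hypothetical, not measured plotter data.

```c
#include <assert.h>

/* Effective payload throughput of an asynchronous serial line.
 * Assumes every byte travels in its own frame of `bits_per_frame`
 * bits (10 for 8N1: start + 8 data + stop) with no idle gaps. */
static double serial_bytes_per_second(double baud, int bits_per_frame)
{
    assert(baud > 0.0 && bits_per_frame > 0);
    return baud / bits_per_frame;
}

/* Seconds needed to push `payload_bytes` through the line. */
static double serial_transfer_seconds(double payload_bytes, double baud,
                                      int bits_per_frame)
{
    return payload_bytes / serial_bytes_per_second(baud, bits_per_frame);
}
```

Under these assumptions, `serial_bytes_per_second(38400, 10)` gives 3,840 bytes/s, so a hypothetical 100,000-byte plot job would take `serial_transfer_seconds(100000, 38400, 10)`, roughly 26 seconds.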

Dead DEC Multia… any ideas?

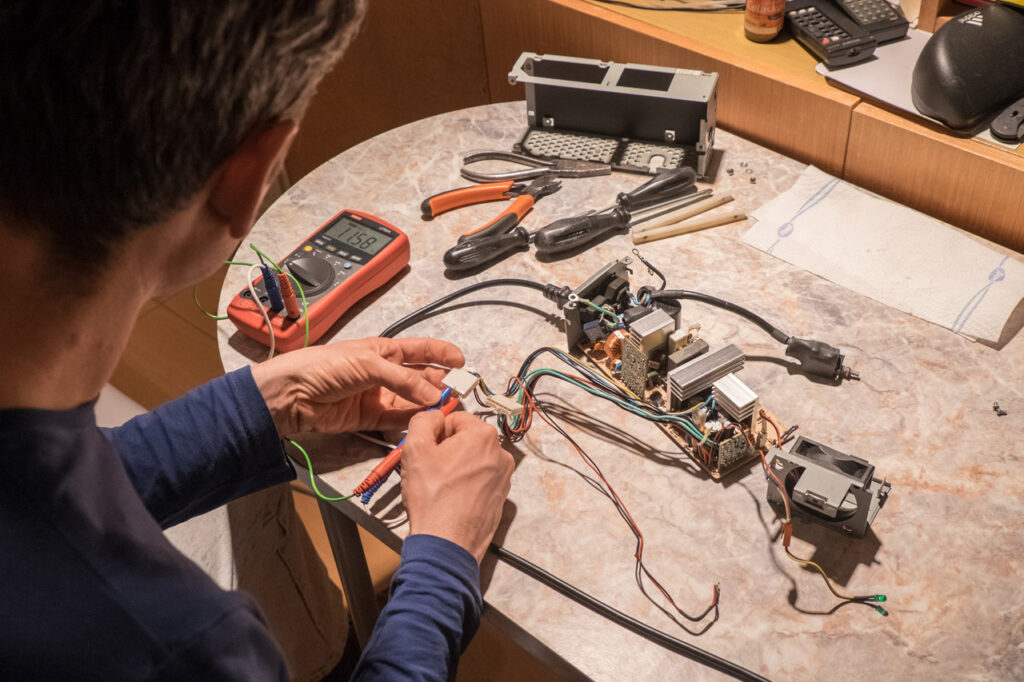

I was given a Multia a few months ago by a former Digital employee. He told me that the machine would not start (no sign of life; even the fan didn’t spin). I cleaned it, checked all the cables, and the machine started without any issue. However, after an hour of work, the screen went black and the machine was no longer able to boot (no smoke effects).

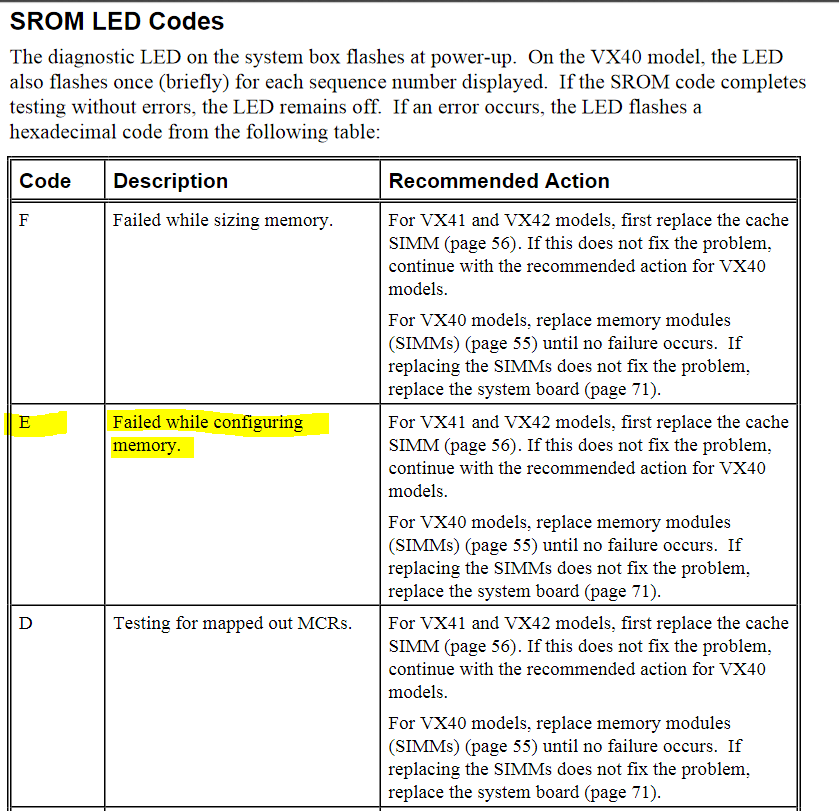

I thought this might be the well-known issue of the two chips on the bottom side of the system board dying from overheating. Ordering these chips looked easier than doing any diagnostics, so we ordered replacements and “fixed” the board. However, it didn’t help. My Multia still blinks error code E (“Failed while configuring memory”).

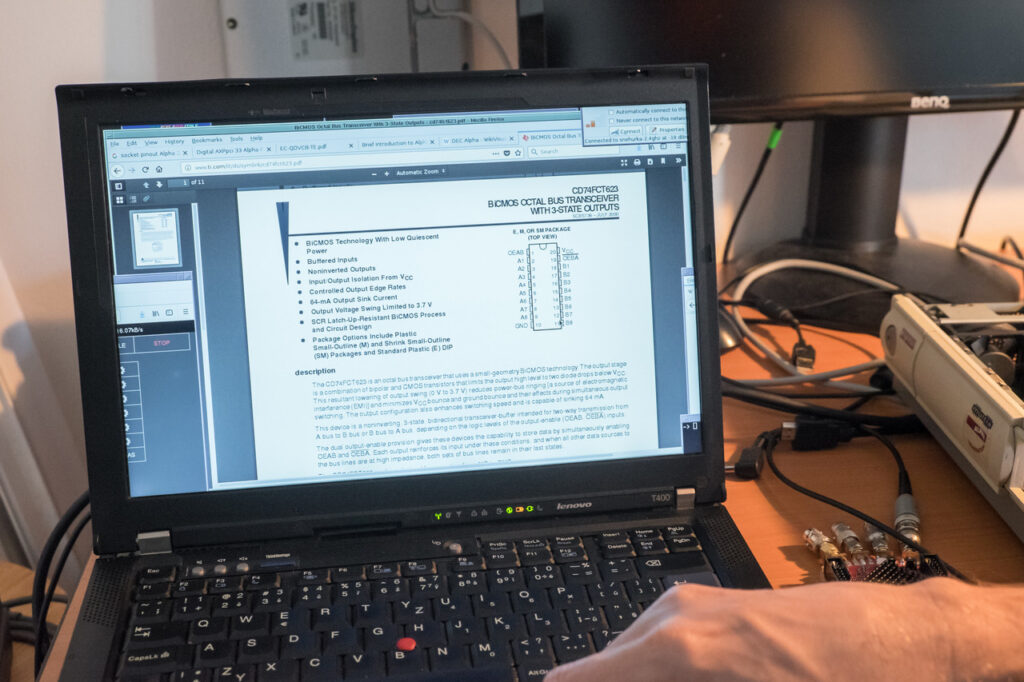

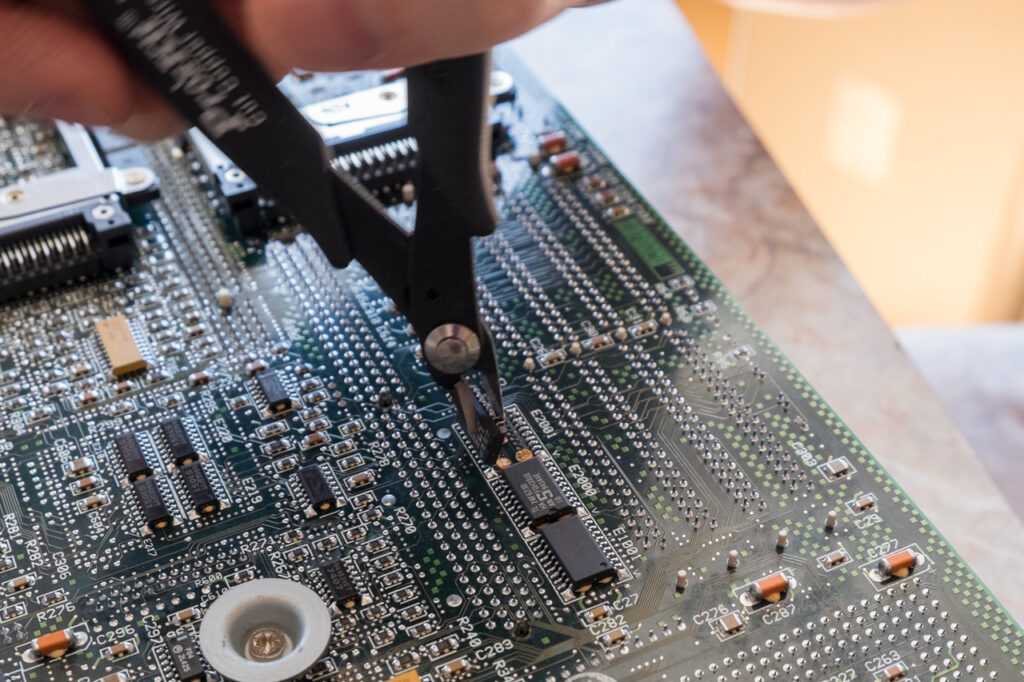

These chips are octal bus transceivers that sit between the CPU and the RAM slots. There are nine of them (8× 8-bit for data, 1× 8-bit for ECC), two of which are on the bottom side. We did some checks with an oscilloscope to see what was happening there. At least the OEAB signal was changing rapidly. !OEBA seemed to be H all the time (I cannot tell for sure; maybe there were just too few changes). There was some data on two of the nine chips. The remaining transceivers showed no visible data coming from the CPU (their lines stayed L).
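To make the scope readings easier to reason about, here is a small truth-table model of a generic octal bus transceiver with two independent output enables: an active-high OEAB (drives the B side from A) and an active-low !OEBA (drives the A side from B), as found in 74x652-style parts. This is an assumption: the exact part number and which side faces the CPU on the Multia board are not confirmed here, and the type and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of one octal transceiver's output enables.
 * oeab   : active-high enable for the A->B drivers
 * n_oeba : active-low enable for the B->A drivers (the "!OEBA" pin)
 * Both inputs are logic levels: 0 (L) or 1 (H). */
typedef struct {
    bool drives_b; /* A->B outputs active (B side driven from A) */
    bool drives_a; /* B->A outputs active (A side driven from B) */
} xcvr_state;

static xcvr_state xcvr_enables(int oeab, int n_oeba)
{
    assert((oeab == 0 || oeab == 1) && (n_oeba == 0 || n_oeba == 1));
    xcvr_state s;
    s.drives_b = (oeab == 1);   /* active high */
    s.drives_a = (n_oeba == 0); /* active low  */
    return s;
}
```

In this model, with `n_oeba` held at 1, `xcvr_enables(oeab, 1).drives_a` is false whatever OEAB does, so a !OEBA line that really stays H would mean the B-to-A direction is never enabled.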

We don’t have a usable logic analyzer at the moment, so it is hard to go further. I tried to find documentation and block diagrams of the machine, without success (I do have a reference board design for the CPU, though). Also, all Multia pages just mention the issues with the two transceivers that we have already replaced… but there is no further explanation of how the machine behaves when these are faulty (which would let us check that we are on the right track).

Any ideas what to do next? Burn it with fire?

DEC Multia Restoration #2

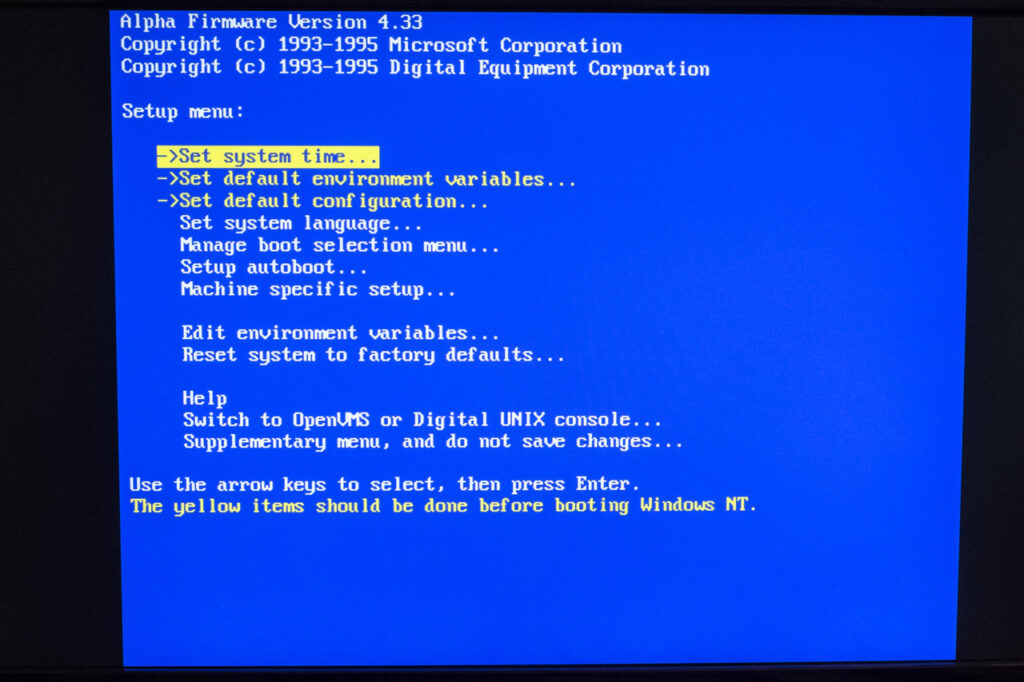

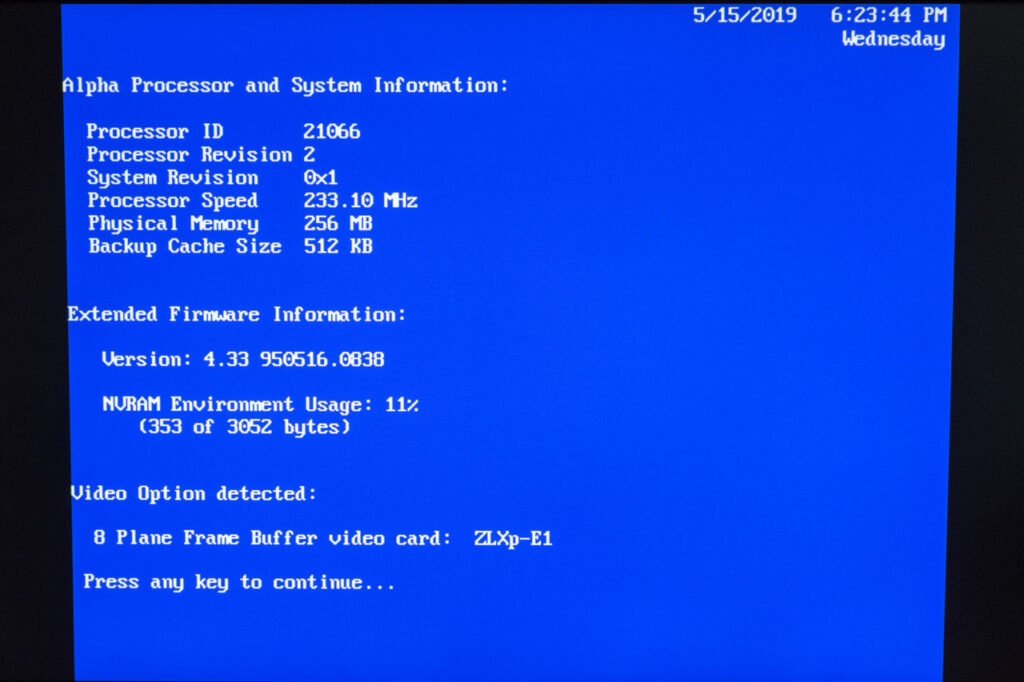

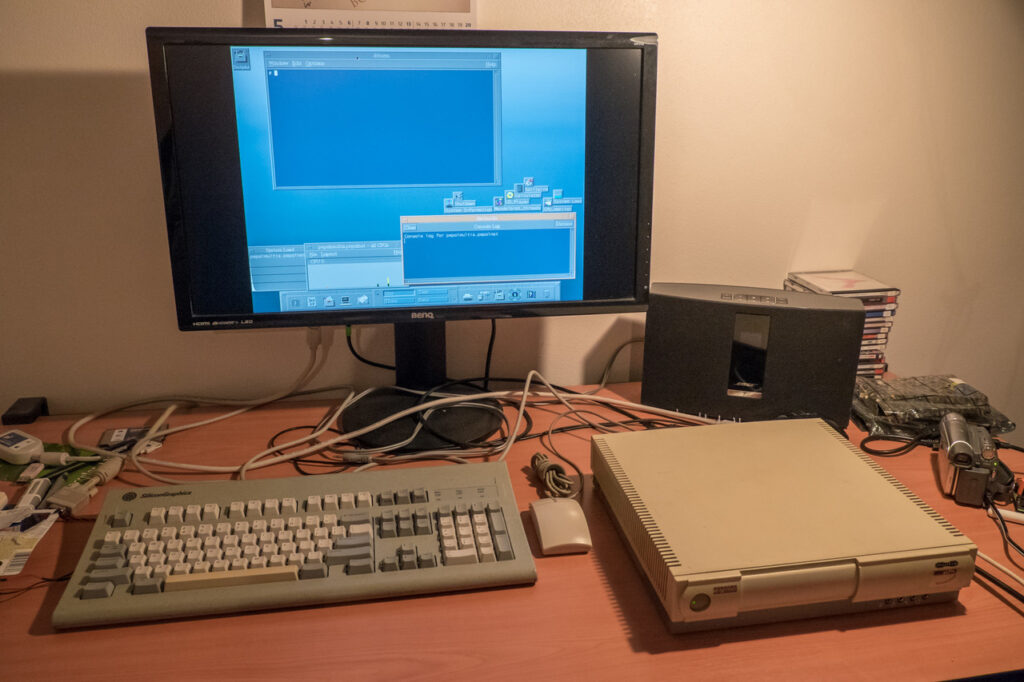

In the first part, I cleaned this little machine and convinced it to boot. Sadly, it died an hour after the first start. Anyway, here are some photos showing:

- Video card self-check (color stripes)

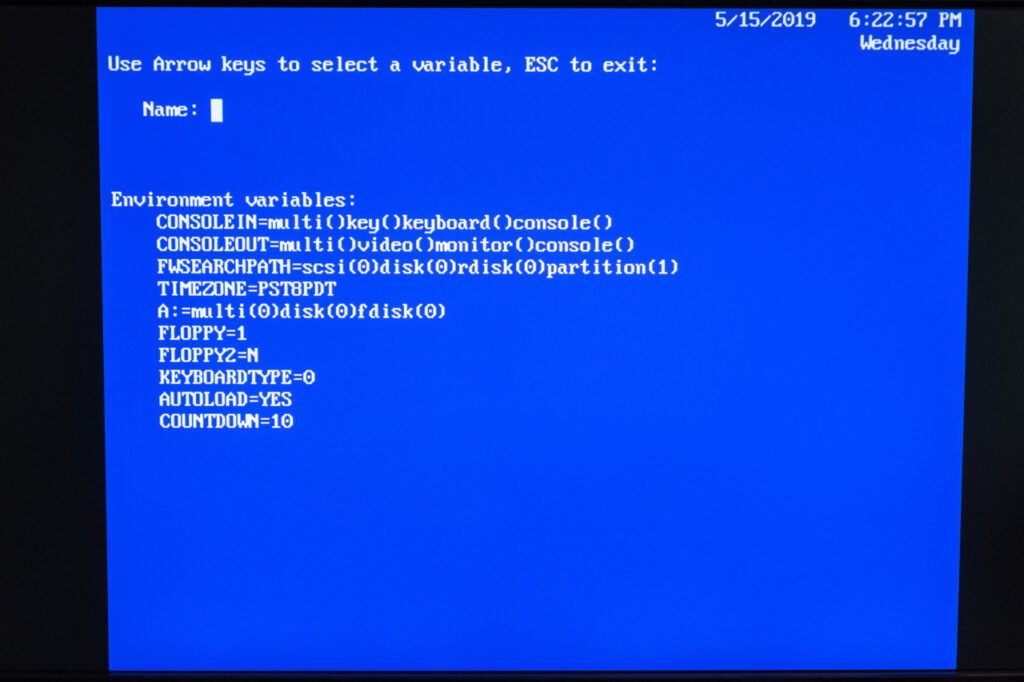

- ARC firmware for loading Windows NT (blue background)

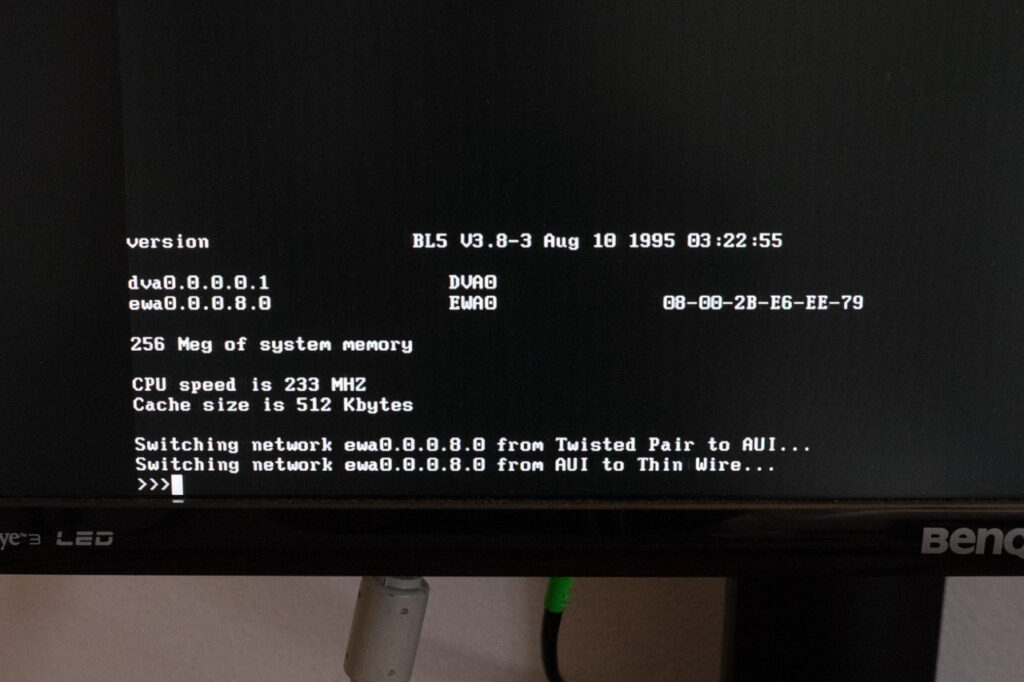

- SRM console, also integrated in the firmware, for booting UNIX and VMS (black background)… yes, it has dual firmware

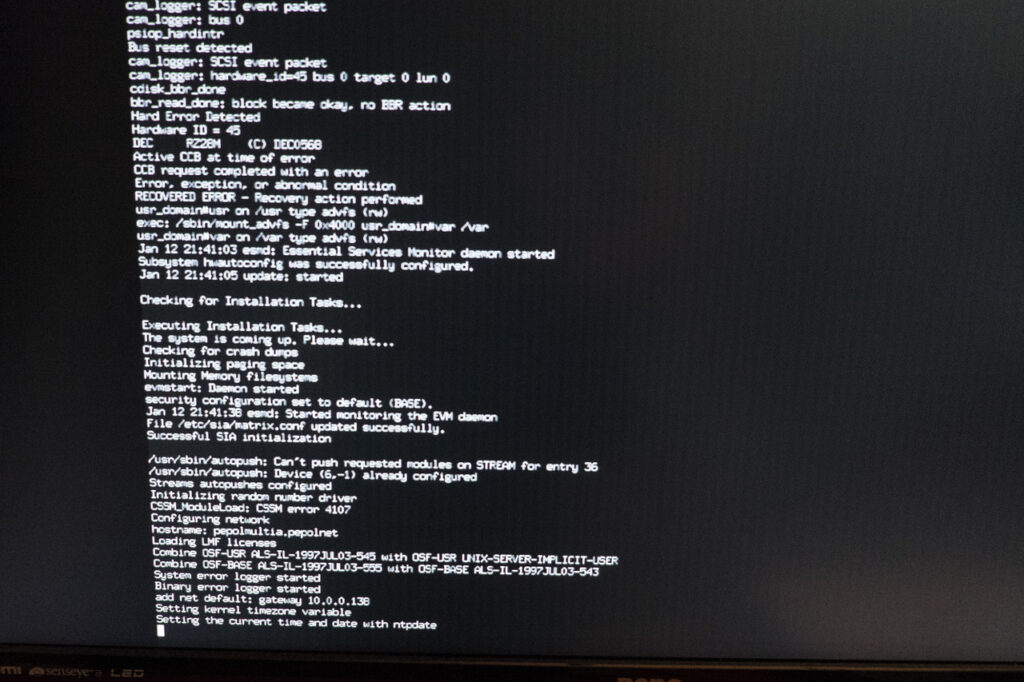

- Digital Tru64 UNIX boot

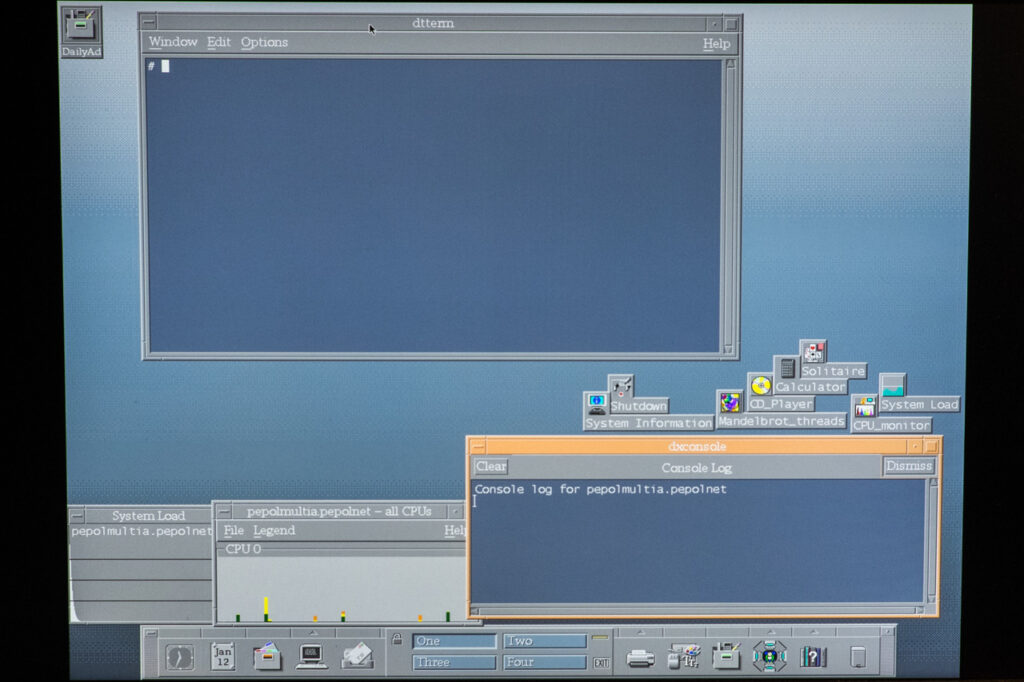

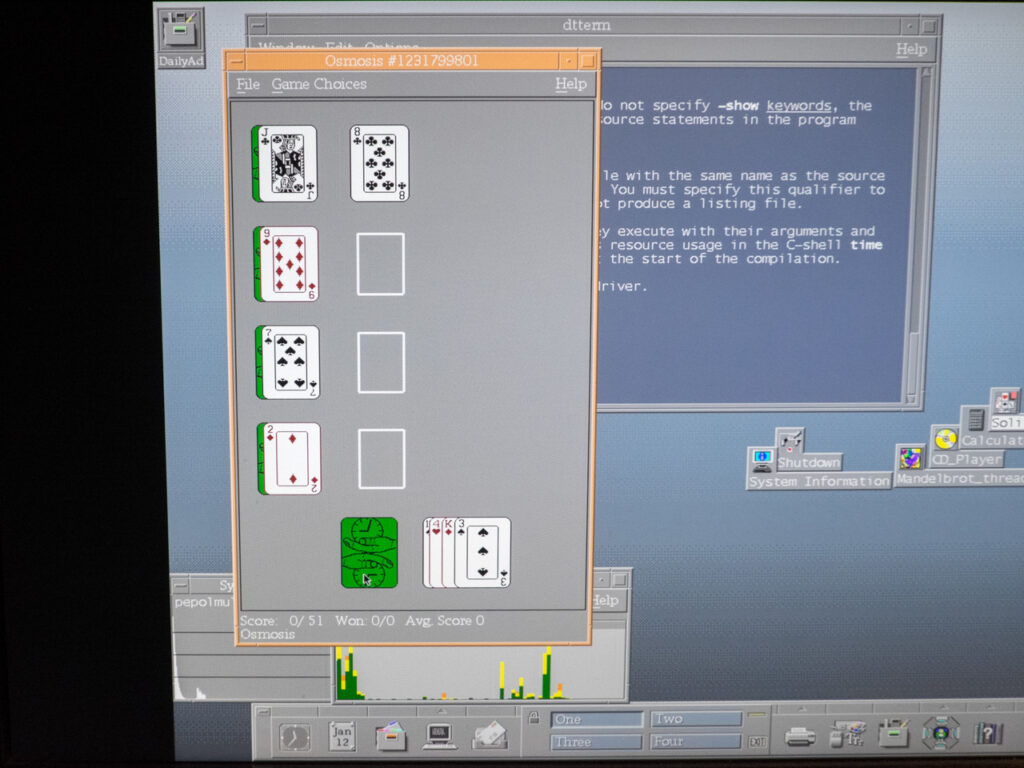

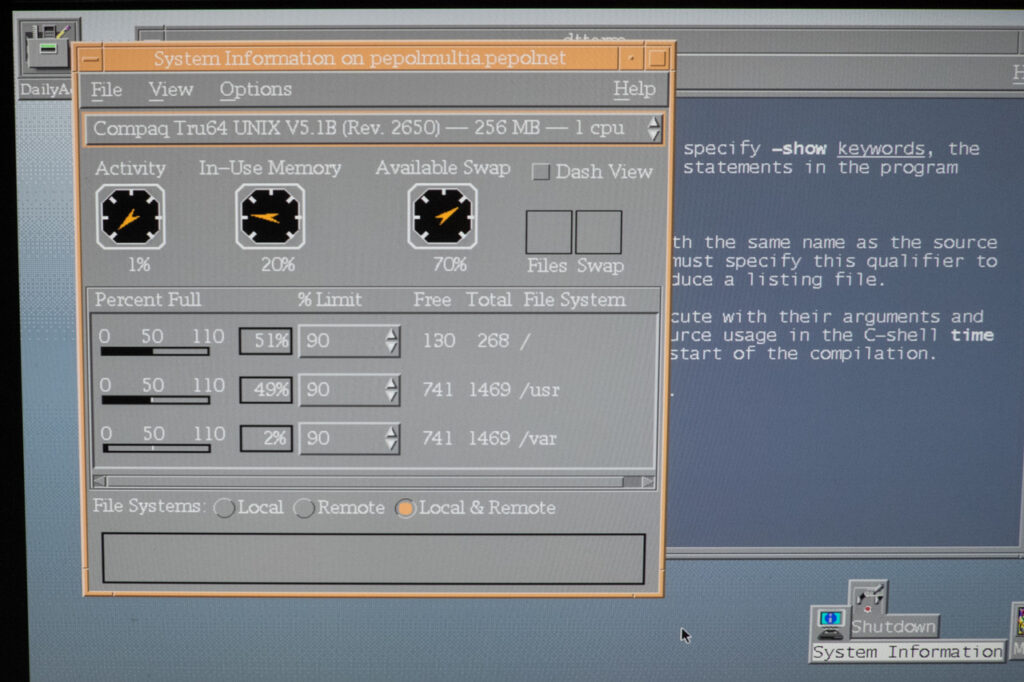

- CDE graphics environment

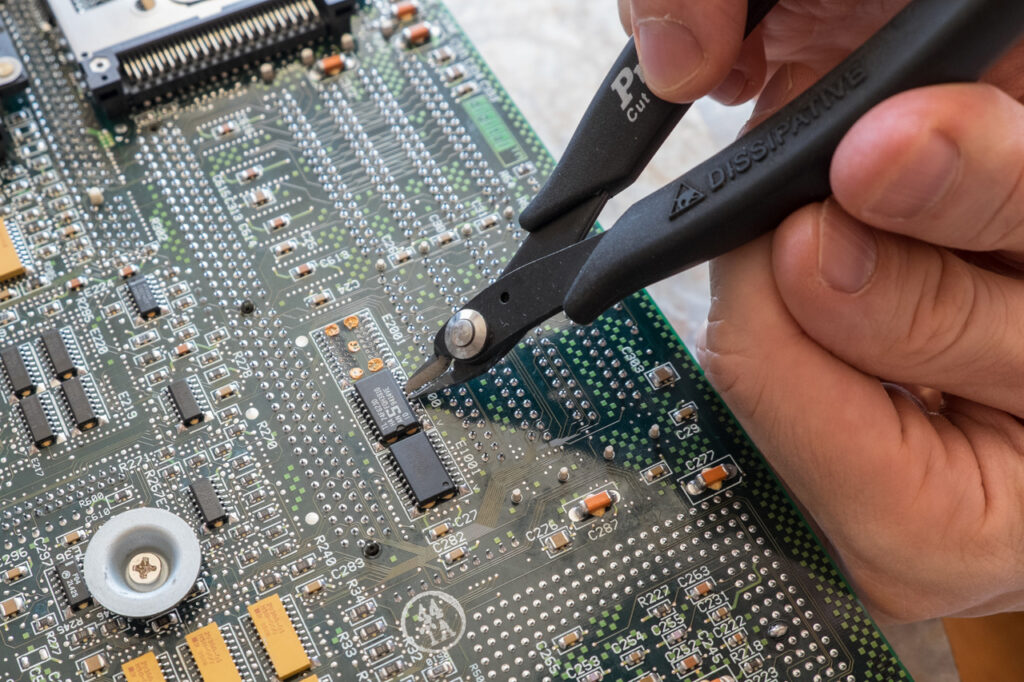

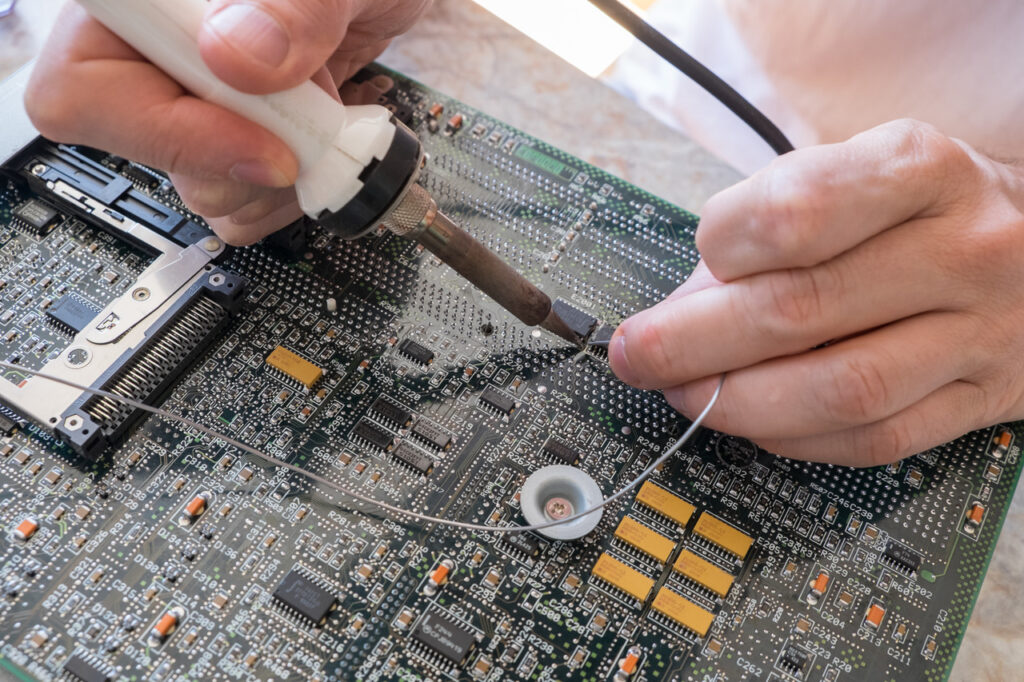

Today, I will try to replace the two suspicious chips. Let’s hope that brings the machine back to life.

DEC Multia Restoration #1

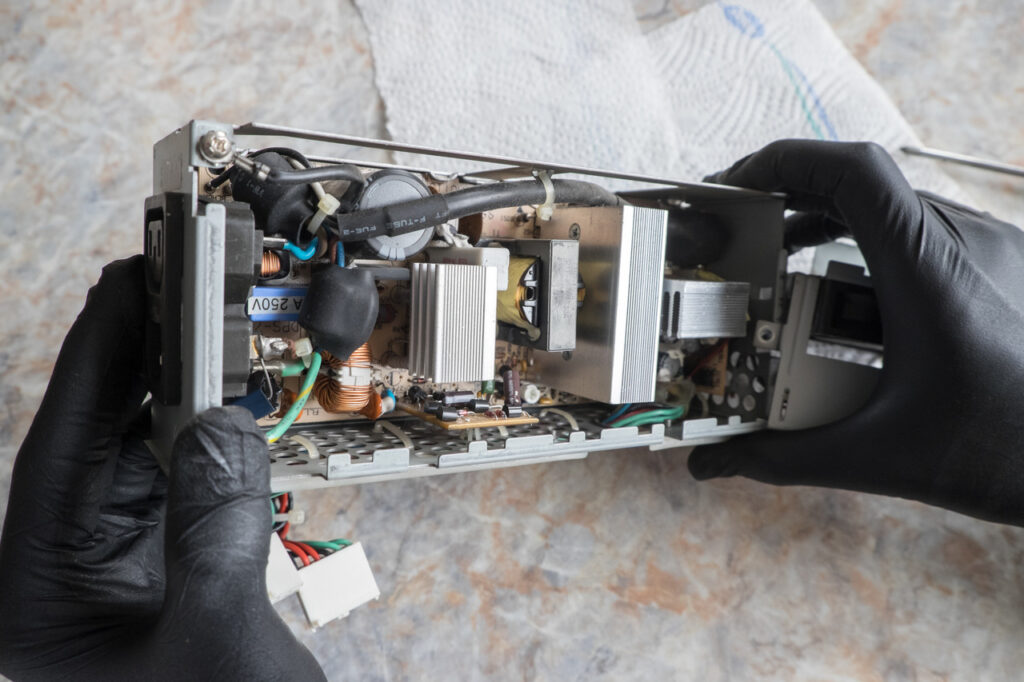

Multia (1994) was the smallest Alpha-based computer made by DEC. It was intended as a low-cost workstation but was never really successful. One of my colleagues, a former DEC employee, gave me this machine in a non-working state and, as my first and only Alpha-based system, it deserved to be fixed.

I completely disassembled the computer and cleaned every single component inside to get rid of the dust and an ugly mold smell. Only minor issues were found, and they were easily fixed. Some partially disconnected cables were probably the reason why the system didn’t boot when the original owner found it again in storage.

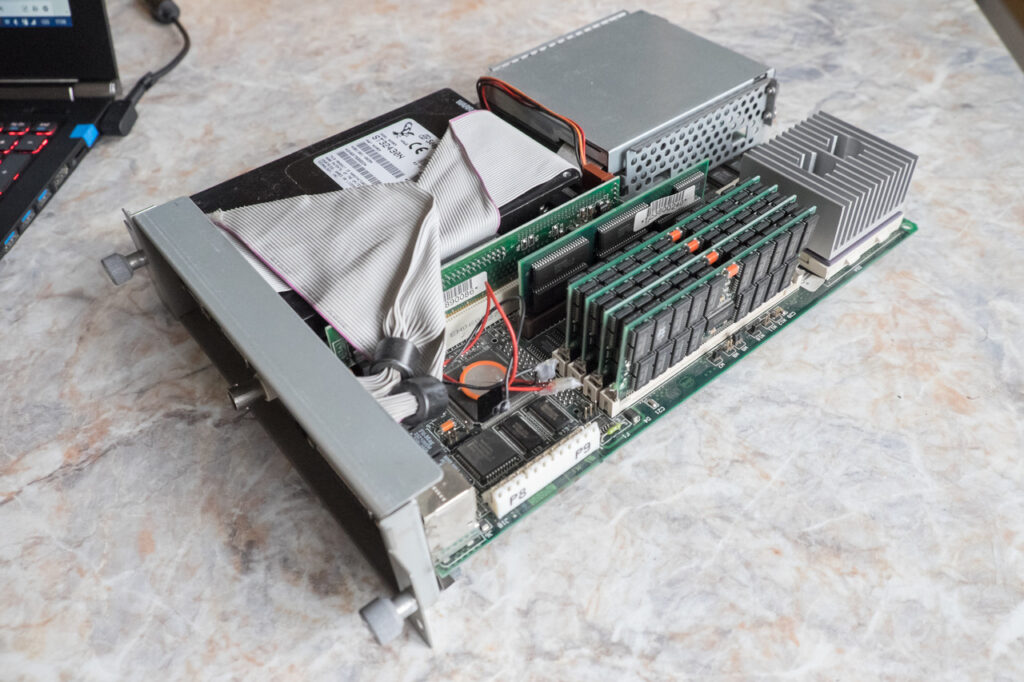

Multia was incredibly small even by the office PC standards of the time. DEC managed to squeeze a 64-bit Alpha CPU, enough RAM slots, a 2-MB 2D graphics accelerator, an Ethernet controller, an IDE interface, a PCI slot and two PCMCIA slots (on the bottom side) onto a small mainboard. The high-end configurations (like this one) were offered with a small PCI riser carrying a SCSI controller chip, combined with a 3.5-inch SCSI hard drive that filled the last empty space inside the case. As a result, these configurations overheated significantly.

Trancept Systems TAAC-1 (1987)

TAAC-1 is an interesting and little-known piece of history. Its creators call it the first board-level GPGPU (a programmable graphics card). It was designed to accelerate scientific and medical visualization and could render 30,000 3D Gouraud-shaded, Z-buffered polygons per second. In addition, it could be programmed to accelerate volume rendering and ray tracing. The board could even be programmed in C, which allowed it to do more than just graphics.

The large double-plane VME board filled three slots, and half of it was covered with memory chips. It had 8MB of frame-buffer memory, and the 200-bit GPU logic ran at 8MHz, producing up to 1024×1024 pixels in true color. TAAC-1 was used with Sun 3 systems based on the Motorola 68020 (16.67MHz).
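As a quick sanity check on the memory figures, here is the raw size of one 1024×1024 frame at two common true-color depths. The 24- and 32-bit depths are assumptions chosen for illustration (the function name is hypothetical); how TAAC-1 actually organized its 8MB is not described here.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes needed for one uncompressed frame of width x height pixels. */
static size_t frame_bytes(size_t width, size_t height, unsigned bits_per_pixel)
{
    assert(bits_per_pixel % 8 == 0); /* whole bytes per pixel only */
    return width * height * (bits_per_pixel / 8);
}
```

`frame_bytes(1024, 1024, 24)` is 3,145,728 bytes (3 MiB) and `frame_bytes(1024, 1024, 32)` is 4,194,304 bytes (4 MiB), so 8MB (read as 8 MiB) is exactly enough for two 32-bit frames of that size.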

Trancept Systems Inc. was founded in 1986 by three people. One of them was Tim Van Hook, the same person who later worked for SGI as a Principal Engineer (the architect of the Nintendo 64) and started a company called ArtX (Nintendo GameCube graphics hardware), which was acquired by ATI in 2000.

Demo video: part1, part2

More info: virhistory.com, obsolyte.com

SGI High IMPACT Graphics (1995)

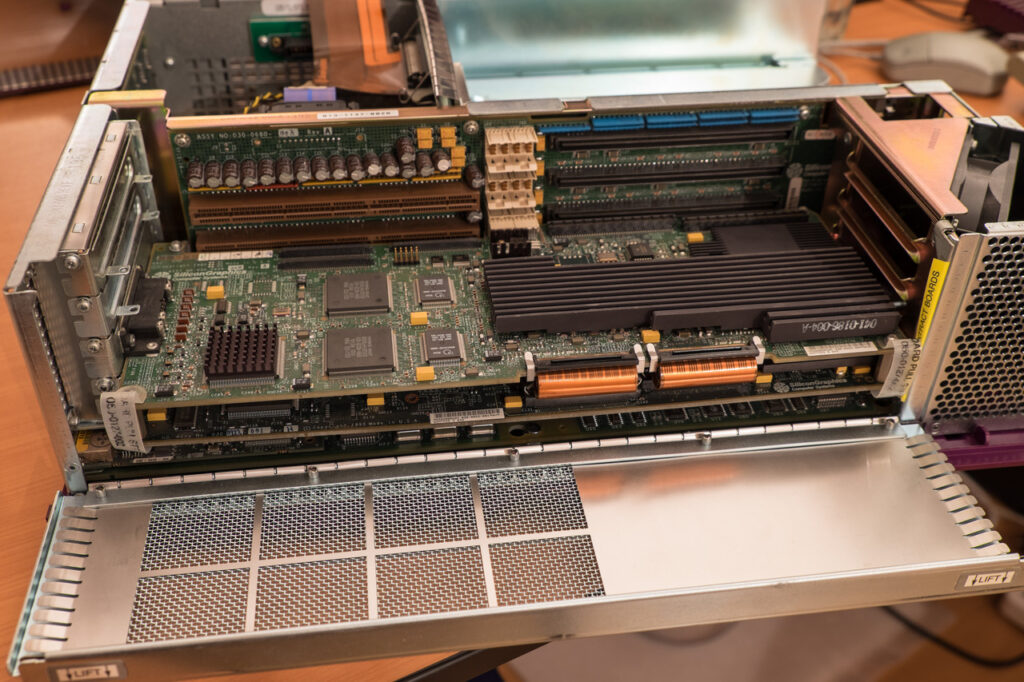

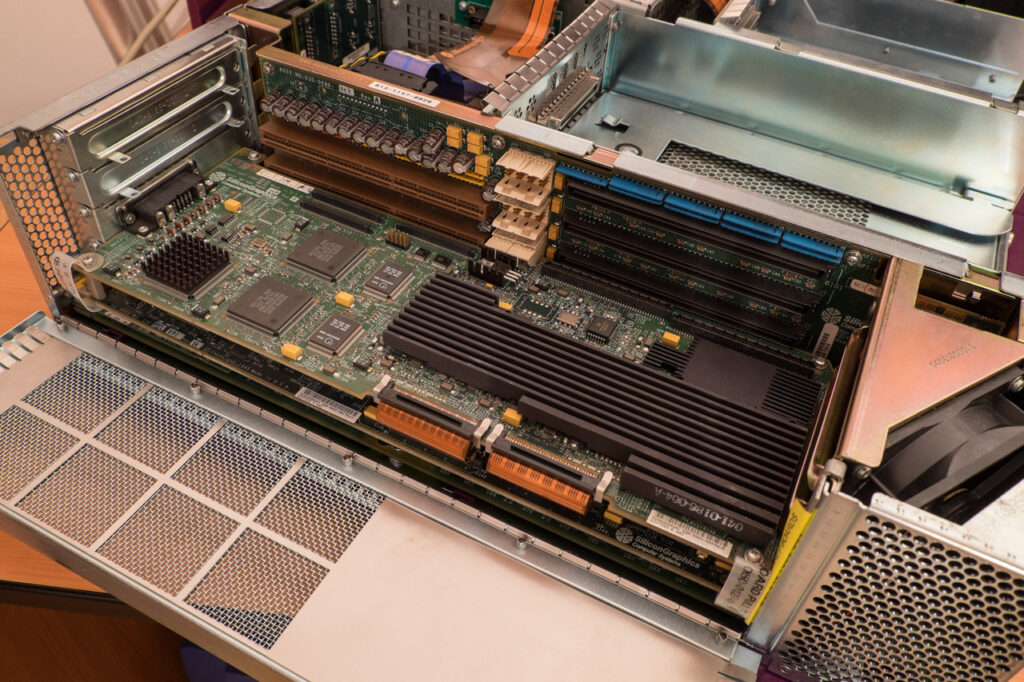

SGI Indigo2 IMPACT systems were the best workstations for game development and other work involving textured 3D rendering in 1995. My system is equipped with High IMPACT Graphics, a two-card solution with a dedicated geometry engine (one million triangles/s), a raster engine with two pixel processors (two pixels per cycle, 60-70 textured Mpixels/s), 12MB of pixel memory and a single texture-mapping unit with its own 1MB of texture memory.

The high-end option was called Maximum IMPACT Graphics. It took three slots in the computer and doubled the rasterisation performance by using exactly the same principle that was later used by 3Dfx Voodoo2 SLI (scan line interleaving).
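Scan-line interleaving can be sketched in a few lines: each raster engine owns every N-th scan line, so a primitive spanning many lines is split roughly evenly between the engines without either one needing the other's results. The sketch below assumes two engines and a simple `y % engines` ownership rule; the function names are hypothetical, and the real IMPACT and Voodoo2 hardware may assign lines differently.

```c
#include <assert.h>

/* Index of the raster engine that owns scan line y when lines are
 * dealt out round-robin across `engines` units (assumed rule). */
static int sli_owner(int y, int engines)
{
    assert(y >= 0 && engines > 0);
    return y % engines;
}

/* Count how many of the scan lines [y0, y1) a given engine rasterizes. */
static int sli_lines_for_engine(int y0, int y1, int engine, int engines)
{
    int count = 0;
    for (int y = y0; y < y1; ++y)
        if (sli_owner(y, engines) == engine)
            ++count;
    return count;
}
```

For a triangle covering lines 100 to 199, `sli_lines_for_engine(100, 200, 0, 2)` and `sli_lines_for_engine(100, 200, 1, 2)` both return 50, so in the ideal case each engine does half of the per-pixel work.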

The 3D performance of SGI Indigo2 IMPACT was years ahead of PCs and other workstations. In fact, the 3Dfx Voodoo2, the best 3D gaming accelerator for PCs in 1998, had performance similar to High IMPACT Graphics, but unlike the IMPACT series, it didn’t support windowed rendering, 32-bit color precision or high resolutions.

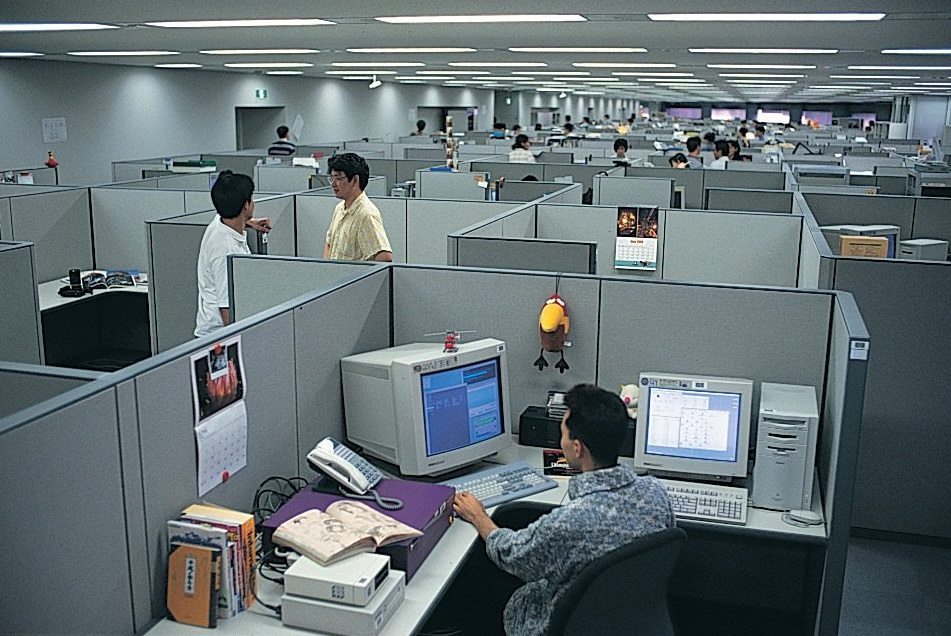

The last two photos show Indigo2 IMPACT systems during the development of Final Fantasy VII (source: Sony press kit).

GPUbench results – compare the SGI Maximum IMPACT performance with other 3D accelerators of the same era.

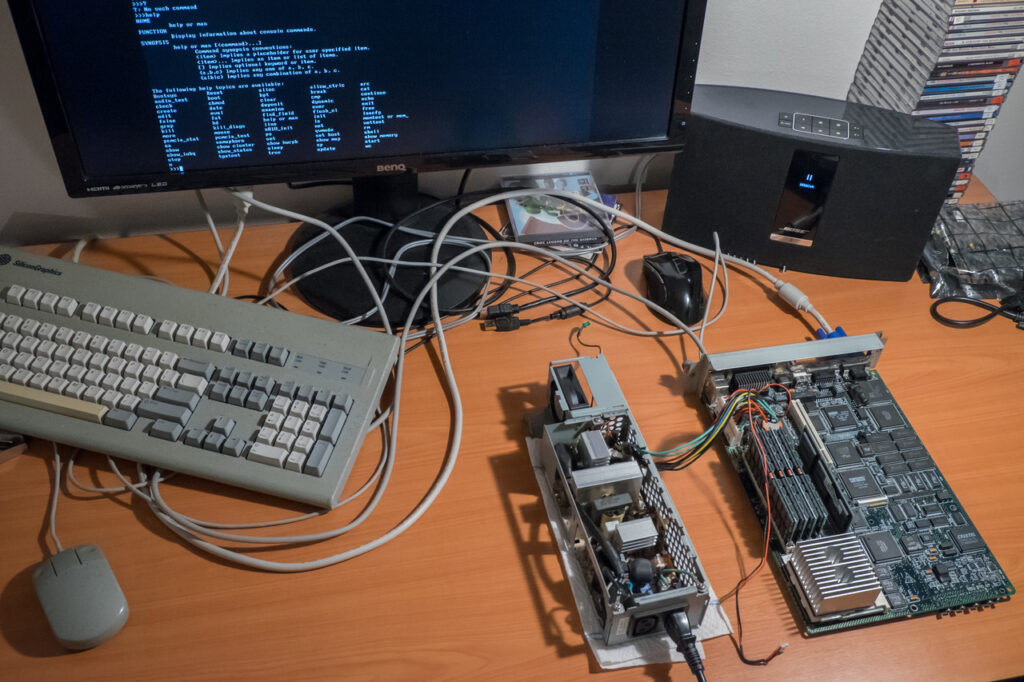

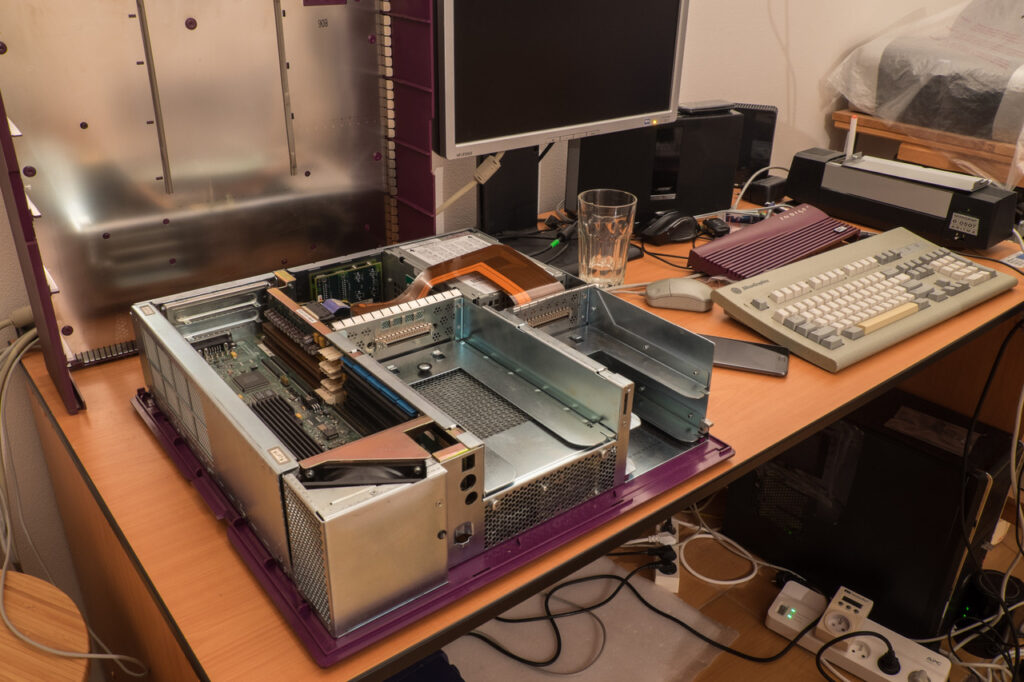

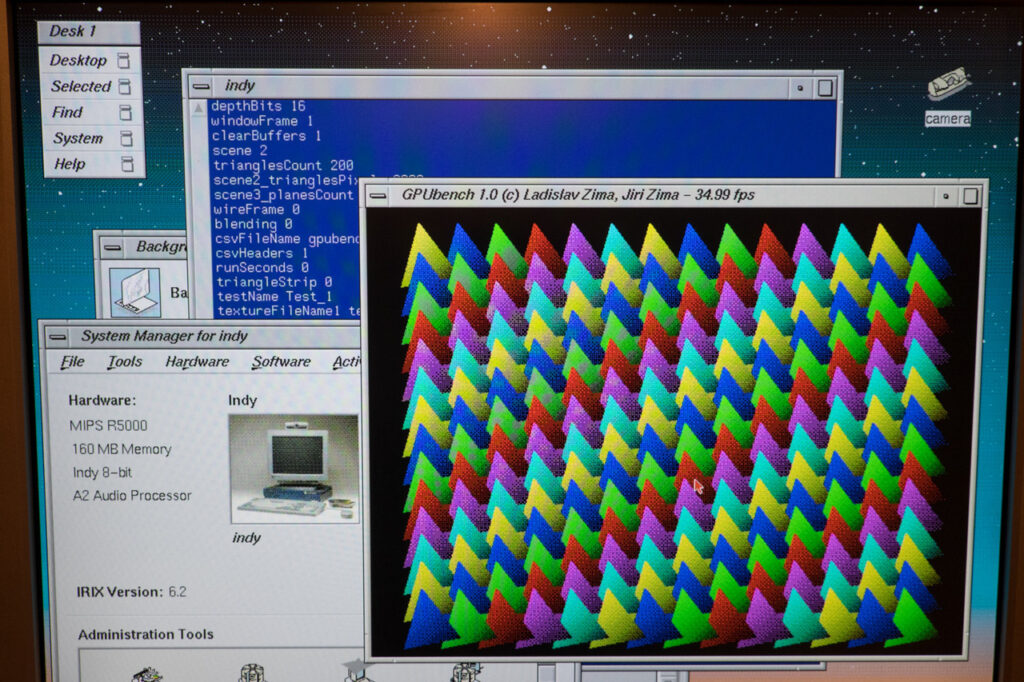

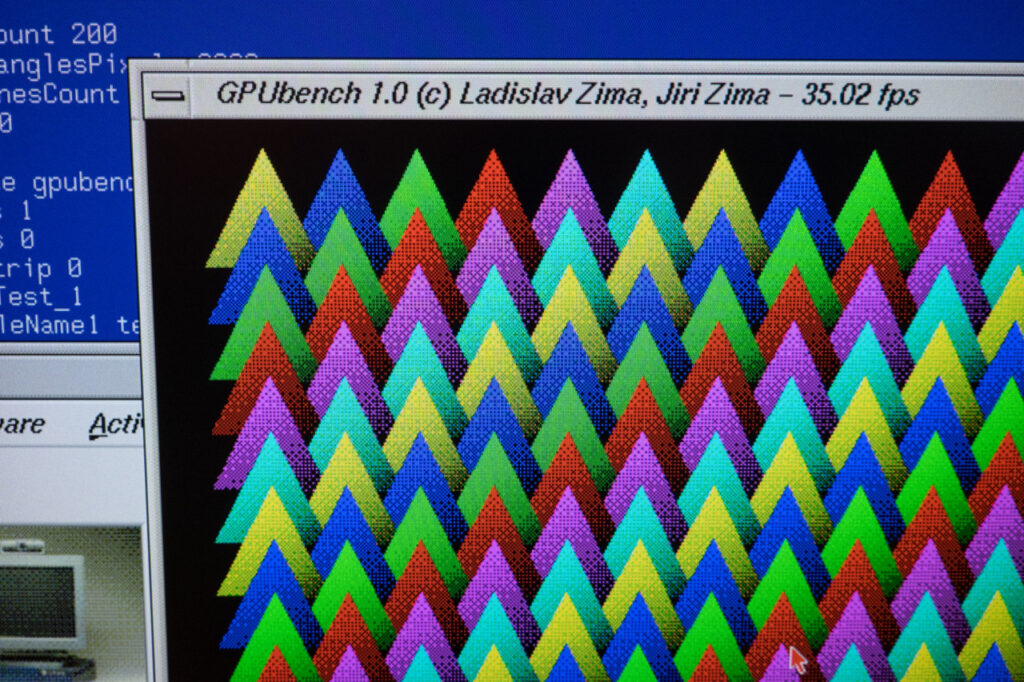

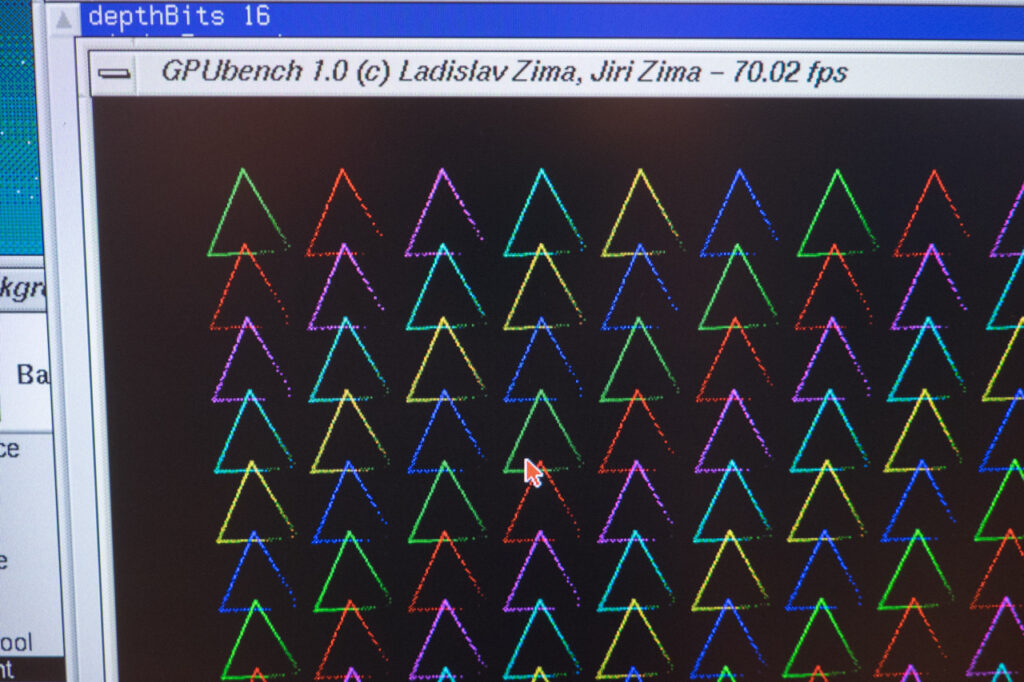

SGI Indy (1993)

This machine represented SGI’s low-end workstation offering. It was targeted at Mac users (DTP…) who needed more graphics and processing power than they could get from Macintosh Quadra systems. It’s a sleek pizza-box computer with just a single quiet fan inside (unlike other SGI systems). However, compared with non-SGI competitors, it was not slow. It had at least a 100-MHz (64-bit) MIPS processor, at least 16MB of RAM (reasonable configurations started with 32MB) and multiple graphics card options. 10Mbit/s LAN, an ISDN modem and video inputs (composite / S-Video / a digital port for the bundled webcam) were integrated on the logic board as standard in all configurations.

My Indy is from 1995 and has a more powerful 150MHz MIPS R5000 CPU. On the other hand, it is equipped with the lowest-end graphics option (XL8/XGE8/Newport), which supports no more than 256 colors and was introduced with the early machines.

I always thought that the Indy was the only SGI system without any 3D acceleration when sold with the XL8 (2MB of 64-bit video RAM) or XL24 (6MB of 192-bit video RAM for true-color modes) graphics cards. I expected just a crappy framebuffer (with BitBlt) and nothing more, like in Sun and HP machines. I was wrong. The REX3 chip inside the Newport graphics is pretty capable. Although all 3D transformations and triangle setup are done in software, the chip can rasterize triangles with smooth (Gouraud) shading and per-vertex alpha blending. Even Z-buffering is partially accelerated by the chip (the Z-buffer is stored in system memory, though).
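The per-pixel work that the chip takes over can be illustrated with a software sketch: walk one horizontal span, interpolate the Gouraud intensity linearly between the span's end points and test each pixel against a Z-buffer. This is a generic textbook span loop written for illustration, not REX3's actual hardware algorithm or register interface; the `span_end` type, the `gouraud_span` function and the single 8-bit intensity channel are hypothetical simplifications.

```c
#include <assert.h>
#include <stdint.h>

/* One end of a horizontal span, as produced by (software) triangle setup. */
typedef struct {
    int   x;     /* pixel column */
    float shade; /* Gouraud intensity, 0..255 */
    float z;     /* depth, smaller = closer */
} span_end;

/* Fill pixels [l.x, r.x) of one scan line with a linearly interpolated
 * shade, writing only where the new depth passes a less-than Z test. */
static void gouraud_span(uint8_t *color_row, float *z_row,
                         span_end l, span_end r)
{
    assert(l.x <= r.x);
    int width = r.x - l.x;
    if (width == 0)
        return;
    float dshade = (r.shade - l.shade) / width; /* per-pixel increments */
    float dz     = (r.z - l.z) / width;
    float shade = l.shade, z = l.z;
    for (int x = l.x; x < r.x; ++x) {
        if (z < z_row[x]) {                         /* depth test */
            z_row[x] = z;                           /* update Z-buffer */
            color_row[x] = (uint8_t)(shade + 0.5f); /* round to nearest */
        }
        shade += dshade;
        z += dz;
    }
}
```

The CPU-side setup would compute the `span_end` values for each scan line of a triangle; only the inner loop corresponds to the work the text attributes to the chip.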

In fact, in terms of features, this chip is not very far from early PC 3D accelerators (1996-1997)… except for texturing support, which was not available even on many higher-end workstation-class 3D accelerators in 1993. This is the first time I have seen 3D-accelerated OpenGL (1.0) on such an old graphics card, and in 256 colors. To be precise, the scene with triangles has just 16 colors, because any real-time graphics requires double buffering. Each byte of the window in video memory contains one pixel from each of the two buffers, in a GBRG-GBRG bit arrangement.
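The 16-color double-buffered mode can be modelled as two 4-bit pixels sharing one byte (8 bits split between two buffers leaves 4 bits, so 2^4 = 16 colors per buffer). The sketch below assumes that the high nibble belongs to buffer 0 and the low nibble to buffer 1, and that each nibble reads G B R G from the most to the least significant bit, with the first G as the high bit of a 2-bit green value; the actual Newport bit assignment may differ, and all function names are hypothetical. Drawing into the back buffer then becomes a read-modify-write that leaves the displayed nibble untouched.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed 4-bit pixel layout, MSB..LSB: G1 B R G0
 * (2 bits of green, 1 of blue, 1 of red = 16 colors). */
static uint8_t rgb_to_nibble(unsigned r, unsigned g, unsigned b)
{
    assert(r < 2 && g < 4 && b < 2);
    return (uint8_t)(((g >> 1) << 3) | (b << 2) | (r << 1) | (g & 1u));
}

/* Assumed: buffer 0 lives in the high nibble, buffer 1 in the low one. */
static unsigned nibble_shift(int buffer)
{
    assert(buffer == 0 || buffer == 1);
    return buffer == 0 ? 4u : 0u;
}

/* Write a pixel into one buffer without disturbing the other. */
static uint8_t write_pixel(uint8_t byte, int buffer, uint8_t nibble)
{
    assert(nibble < 16);
    unsigned s = nibble_shift(buffer);
    return (uint8_t)((byte & ~(0x0Fu << s)) | ((unsigned)nibble << s));
}

/* Read back the 4-bit pixel seen when `buffer` is displayed. */
static uint8_t read_pixel(uint8_t byte, int buffer)
{
    return (uint8_t)((byte >> nibble_shift(buffer)) & 0x0Fu);
}
```

For example, `write_pixel(0xB0, 1, rgb_to_nibble(0, 0, 1))` yields 0xB4: the low nibble now holds the new back-buffer pixel, while the high nibble (0xB) of the displayed buffer is unchanged.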

The graphics card is faster than I expected. The pixel fill rate for smooth-shaded triangles is ~50Mpix/s; with alpha blending, it drops to ~20Mpix/s. That is 5-20 times as fast as the Windows NT 4.0 software renderer on a laptop with a 133-MHz Pentium MMX and a 2D-only graphics chip. In 3D, its speed is more comparable to the 3Dlabs Permedia, S3 ViRGE/DX and other consumer 3D accelerators from 1996.
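To translate these fill rates into something more tangible, the sketch below computes how long one full-screen fill would take. The 1280×1024 screen size is an assumption chosen for illustration, the function name is hypothetical, and the calculation ignores triangle setup and other overheads, so the results are upper bounds on frame rate rather than predictions.

```c
#include <assert.h>

/* Milliseconds to cover width x height pixels at a given fill rate
 * (pixels per second), ignoring per-triangle setup costs. */
static double fill_time_ms(double width, double height, double pixels_per_second)
{
    assert(pixels_per_second > 0.0);
    return width * height / pixels_per_second * 1000.0;
}
```

At ~50 Mpix/s, `fill_time_ms(1280, 1024, 50e6)` is about 26.2 ms (roughly 38 full-screen fills per second); with alpha blending at ~20 Mpix/s, `fill_time_ms(1280, 1024, 20e6)` grows to about 65.5 ms (roughly 15 per second).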